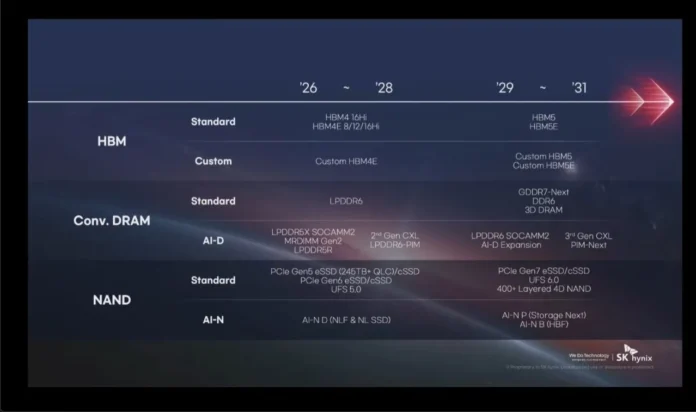

SK hynix unveiled its next-generation memory roadmap at the SK AI Summit 2025, outlining the technologies it plans to bring to market through 2031.

The presentation covered HBM, DRAM, NAND, and AI-optimized memory solutions, with highlights including HBM5, GDDR7-Next, DDR6, and PCIe Gen7 SSDs.

AI at the Heart of Future Memory Design

During its presentation in Seoul, SK hynix showed how AI workloads are reshaping its memory architecture. The roadmap divides development into two phases, 2026–2028 and 2029–2031, covering both data center and consumer workloads.

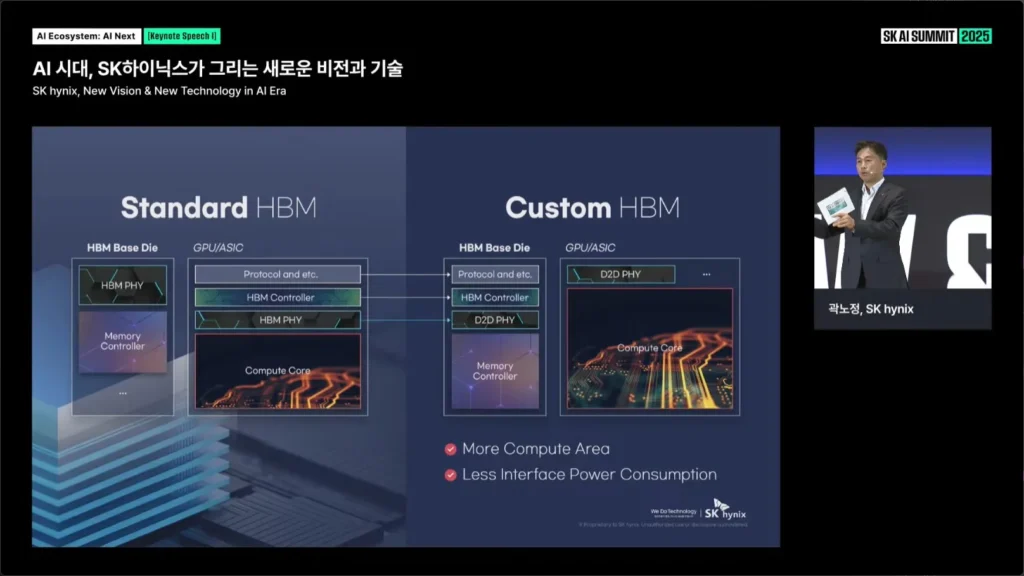

Company executives, led by CEO Kwak No-jung, emphasized an “AI-driven integration” strategy that positions SK hynix as a comprehensive AI memory provider. The strategy covers custom memory design, processing-in-memory, and close collaboration with partners such as TSMC, NVIDIA, and SanDisk.

2026–2028: HBM4, LPDDR6, and PCIe Gen6 Storage

In the near term, SK hynix plans to release:

HBM4 with 16-Hi stacks and HBM4E variants (8, 12, and 16 layers), including custom HBM4E for specific AI accelerators.

LPDDR6 and AI-D (AI DRAM) modules such as LPDDR5X SOCAMM2, MRDIMM Gen2, and CXL LPDDR6-PIM.

PCIe Gen5/Gen6 eSSDs and cSSDs with capacities of 245 TB and beyond, alongside UFS 5.0 and early AI-N (AI NAND) products optimized for AI caching and inference.

This period marks the transition to AI-optimized DRAM and NAND, in which memory actively helps manage computational efficiency rather than passively storing data.

2029–2031: The Era of GDDR7-Next, DDR6, and High-Bandwidth Flash

The long-term roadmap shifts focus to next-generation standards, including:

HBM5 and HBM5E, offering higher stacking density and lower latency for AI workloads.

GDDR7-Next, a successor to GDDR7 expected to exceed 48 Gbps per pin, likely debuting in GPUs after 2029.

DDR6, projected to replace DDR5 in mainstream PCs and servers by the end of the decade, alongside 3D DRAM.

400+-layer 4D NAND, UFS 6.0, and PCIe Gen7 SSDs, designed for next-generation AI storage demands.
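To put the GDDR7-Next per-pin figure in perspective, the short Python sketch below converts a per-pin data rate into peak theoretical bandwidth: rate (Gb/s per pin) × bus width (pins) ÷ 8 bits per byte. The 48 Gbps value comes from the roadmap; the bus widths are hypothetical examples typical of GPU designs, since SK hynix did not specify any, and the result ignores refresh and protocol overhead.

```python
def peak_bandwidth_gb_s(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Peak theoretical bandwidth in GB/s.

    Multiplies the per-pin data rate (Gb/s) by the number of data pins,
    then divides by 8 to convert gigabits to gigabytes. Real-world
    throughput is lower because refresh and protocol overhead are ignored.
    """
    return data_rate_gbps_per_pin * bus_width_bits / 8


if __name__ == "__main__":
    # 48 Gbps/pin is the roadmap's GDDR7-Next threshold; the bus widths
    # below are hypothetical GPU configurations chosen for illustration.
    for bus_width in (128, 256, 384, 512):
        bandwidth = peak_bandwidth_gb_s(48.0, bus_width)
        print(f"{bus_width}-bit bus at 48 Gbps/pin: {bandwidth:.0f} GB/s")
```

On a hypothetical 384-bit bus, for example, 48 Gbps per pin works out to 2,304 GB/s of peak bandwidth.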

The roadmap also introduces High-Bandwidth Flash (HBF), a new NAND-based technology intended to reduce latency in AI inference. HBF targets systems in which storage interacts directly with machine learning processors.


Inside SK hynix’s AI Memory Ecosystem

SK hynix’s roadmap also expands on its AI-D (AI DRAM) and AI-N (AI NAND) architectures, each divided into three classes:

| Category | Description | Focus |
|---|---|---|
| AI-D O (Optimization) | Low-power DRAM for efficient AI workloads | Cost reduction, stability |
| AI-D B (Breakthrough) | Ultra-high-capacity DRAM with flexible allocation | Performance scaling |
| AI-D E (Expansion) | Memory designed for robotics, mobility, and automation | Industry integration |
| AI-N P (Performance) | High-speed NAND for real-time inference | Latency reduction |
| AI-N B (Bandwidth) | High-bandwidth flash for AI servers | Data throughput |
| AI-N D (Density) | Dense NAND for large-scale AI datasets | Capacity and efficiency |

These developments highlight SK hynix’s intention to merge computation and memory, a significant shift that could define next-generation AI hardware platforms.

Partnerships and Industry Impact

According to reports from ZDNet and The Chosun Daily, SK hynix has intensified collaborations in the semiconductor ecosystem. The company is working with:

NVIDIA, on AI manufacturing and GPU-memory optimization,

TSMC, on custom HBM base dies, and

SanDisk, on standardizing high-bandwidth flash (HBF) for global deployment.

In addition, SK Group is accelerating its capital investments. The upcoming Yongin Semiconductor Cluster, expected to open in 2027, will offer production capacity equivalent to roughly 24 M15X fabs and will form the basis of the company’s AI-era manufacturing.

Why SK hynix’s Roadmap Is Important

This roadmap shows how memory technology is becoming central to AI systems. Rather than relying solely on faster CPUs or GPUs, companies like SK hynix are redesigning how data moves and interacts within systems.

By 2030, technologies like GDDR7-Next, HBM5, DDR6, and HBF could reshape everything from data-center infrastructure to consumer AI devices. SK hynix’s long-term strategy positions it to challenge Samsung’s dominance and gain a key role in the AI-driven semiconductor future.

Source: SK hynix AI Summit 2025 (via @harukaze5719 on X)