NVIDIA’s China-focused RTX 6000D workstation GPU has appeared in its first public teardown, revealing an 84GB GDDR7 memory configuration and a reduced Blackwell core design that differentiates it from the flagship RTX PRO 6000. The findings offer the clearest look yet at how NVIDIA is segmenting high-end AI and compute hardware for the Chinese market while maintaining alignment with the broader RTX PRO 6000 Blackwell platform.

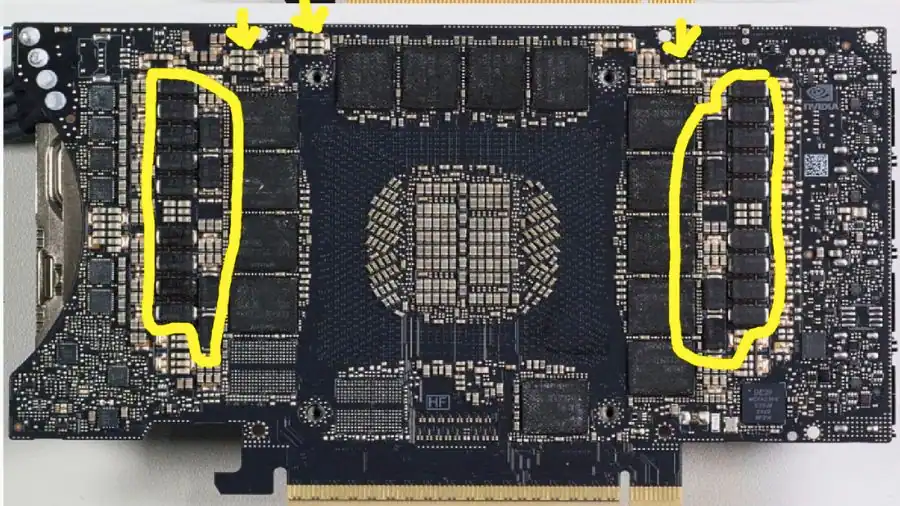

Images shared by UNIKO’s Hardware on X show the card labeled “RTX 6000D 84G,” with the GPU marked GB202-891-KA-A1 and 28 memory packages on the board. At 3GB per chip, those 28 packages account for the unusual 84GB total. Using 3GB GDDR7 modules lets NVIDIA keep aggregate capacity high while populating fewer memory channels than the 96GB variant.

A more detailed teardown published by GINNSOD on Bilibili shows the same 28-chip layout surrounding a large GB202 die on a server-style PCB. The memory arrangement aligns with a 448-bit interface using 3GB GDDR7 modules, a departure from the 96GB, 512-bit configuration used on the full RTX PRO 6000 lineup. By narrowing the bus from 512-bit to 448-bit, NVIDIA trims peak memory bandwidth while keeping total VRAM relatively close to the flagship, suggesting deliberate segmentation rather than a drastic capacity reduction.
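The memory arithmetic behind these figures can be checked with a short sketch. It assumes each GDDR7 package position occupies a 32-bit slice of the bus and that chips can share a slice in a clamshell arrangement, neither of which the teardown states outright. The function name `memory_layout` is illustrative, and the bandwidth comparison assumes both cards run their memory at the same per-pin data rate, which has not been reported for the RTX 6000D.

```python
def memory_layout(chips: int, gb_per_chip: int, bus_bits: int, slice_bits: int = 32) -> dict:
    """Derive capacity and chip placement from a board's memory population.

    slice_bits=32 is an assumption: one GDDR7 package position per 32 bits of bus.
    """
    slices = bus_bits // slice_bits
    return {
        "capacity_gb": chips * gb_per_chip,
        "bus_slices": slices,
        "chips_per_slice": chips / slices,
    }


# Figures reported in the teardown: 28 chips, 3GB each, 448-bit interface.
rtx_6000d = memory_layout(chips=28, gb_per_chip=3, bus_bits=448)

# Relative peak bandwidth versus a 512-bit bus, ASSUMING an equal per-pin data rate.
relative_bandwidth = 448 / 512

print(rtx_6000d)
print(f"Relative bandwidth at equal data rate: {relative_bandwidth:.1%}")
```

Under those assumptions, the 28 chips map to 14 bus slices with two chips each, and the narrower bus would deliver seven eighths of the 512-bit card's peak bandwidth.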

This configuration appears carefully calibrated. Memory capacity remains high enough to accommodate substantial AI models and large datasets, while reduced bandwidth and compute density scale back peak throughput. The result is a product tier that preserves enterprise AI deployment flexibility without matching the unrestricted global flagship in maximum performance metrics.

On the compute side, the RTX 6000D enables 156 streaming multiprocessors for a total of 19,968 CUDA cores, compared with 188 SMs and 24,064 cores on the RTX PRO 6000. That represents roughly a 17 percent reduction in enabled CUDA cores, reinforcing its positioning below the full PRO 6000 in raw parallel throughput. Developer listings referencing “RTX 6000D BSE (GB202-891)” confirm that this is a distinct silicon configuration rather than a simple branding variation.
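The core counts follow directly from the SM counts. The sketch below assumes 128 CUDA cores per SM, the ratio implied by both pairs of figures quoted above; the helper name `cuda_cores` is illustrative.

```python
CORES_PER_SM = 128  # implied by the quoted figures: 19,968 / 156 and 24,064 / 188


def cuda_cores(sms: int) -> int:
    """Total CUDA cores for a given number of enabled SMs."""
    return sms * CORES_PER_SM


cores_6000d = cuda_cores(156)
cores_pro = cuda_cores(188)

# Fraction of CUDA cores disabled relative to the RTX PRO 6000.
core_reduction = 1 - cores_6000d / cores_pro

print(cores_6000d, cores_pro, f"{core_reduction:.1%}")
```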

While the reduction from 96GB to 84GB may appear modest, the shift from a 512-bit to 448-bit interface has broader implications for sustained throughput under AI and high-performance compute workloads. For large language model inference, memory capacity determines feasible model size, while bandwidth influences token generation speed and data movement efficiency. Retaining 84GB of GDDR7 ensures compatibility with substantial transformer-based models, while the narrower bus and reduced SM count cap peak tensor and data throughput, indicating intentional performance scaling rather than cost trimming.
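To make the capacity side of that trade-off concrete, here is a rough sketch of a weight-footprint check. The model sizes, the `reserve_gb` allowance for KV cache and activations, and the function names are all hypothetical; real deployments depend on context length, batch size, and runtime overhead that this ignores.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, bits_per_weight: int, vram_gb: float,
         reserve_gb: float = 8.0) -> bool:
    """True if the weights plus a hypothetical reserve fit in the given VRAM."""
    return weight_footprint_gb(params_billion, bits_per_weight) + reserve_gb <= vram_gb


# Hypothetical models checked against both cards' capacities.
for params, bits in [(70, 16), (70, 8), (85, 8), (70, 4)]:
    print(
        f"{params}B @ {bits}-bit: "
        f"84GB={fits(params, bits, 84)}, 96GB={fits(params, bits, 96)}"
    )
```

In this simplified view, the 12GB gap only matters for models whose footprint lands between the two capacities, such as the hypothetical 85B model at 8-bit precision.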

Clock speeds also appear lower, with reports suggesting boost frequencies around 2430 MHz versus 2600 MHz on the full RTX PRO 6000. An early Geekbench 6 OpenCL score cited at 390,656 points places it meaningfully behind the 96GB model, which typically scores in the 450,000 to 500,000 range. In AI-specific metrics, non-sparse FP4 compute throughput is said to drop from roughly 2015 TFLOPS on the PRO 6000 to about 1552 TFLOPS on the RTX 6000D, a reduction of around 23 percent. Combined with lower clocks and a narrower memory interface, this indicates that tensor performance and raw parallelism are deliberately moderated.
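The reported deltas can be cross-checked against each other. The sketch below assumes, as a simplification, that compute throughput scales linearly with enabled SM count times boost clock; that is a rough model rather than anything NVIDIA has stated, and the helper name `reduction` is illustrative.

```python
def reduction(new: float, old: float) -> float:
    """Fractional drop from old to new."""
    return 1 - new / old


clock_cut = reduction(2430, 2600)   # reported boost clocks, MHz
fp4_cut = reduction(1552, 2015)     # reported non-sparse FP4 figures

# Expected cut if throughput scaled with SMs x clock (a simplifying assumption).
expected_cut = 1 - (156 / 188) * (2430 / 2600)

# Gap between the cited Geekbench score and the 96GB model's typical range.
geekbench_gap_low = reduction(390_656, 450_000)
geekbench_gap_high = reduction(390_656, 500_000)

print(f"Clock cut: {clock_cut:.1%}")
print(f"FP4 cut (reported): {fp4_cut:.1%}")
print(f"Expected cut from SMs x clock: {expected_cut:.1%}")
print(f"Geekbench gap: {geekbench_gap_low:.1%} to {geekbench_gap_high:.1%}")
```

Under that linear model, the fewer SMs and lower clocks alone account for most of the reported FP4 drop, which is consistent with the reduction coming from those two levers rather than a separate tensor-core limit.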

The card is shown with a passive thermal solution and no onboard fans, relying entirely on chassis airflow in server deployments. Although NVIDIA describes the RTX PRO 6000 Blackwell Server Edition as passive-capable, integrators such as GINNSOD are reportedly replacing the stock cooler with custom water blocks for dual-GPU workstation builds, adapting the server-focused design for quieter desktop AI systems.

Power delivery details indicate a 600W power limit, although observed draw appears closer to 400W in practical configurations. The PCB layout mirrors the PRO 6000 design, with additional filtering components on the rear of the board, pointing to electrical tuning adjustments rather than a complete platform redesign.

The RTX 6000D is already appearing in high-end prebuilt systems. One dual-GPU workstation equipped with two RTX 6000D units and an AMD Ryzen Threadripper PRO processor is listed at roughly $26,000, positioning the GPU squarely within enterprise AI, simulation, and professional compute deployments.

Industry observers interpret such variants as part of NVIDIA’s broader strategy to maintain access to the Chinese professional and AI compute market while aligning hardware specifications with evolving U.S. export thresholds. Rather than removing large amounts of memory outright, the RTX 6000D reduces bandwidth, enabled SM count, clock speeds, and tensor throughput while preserving substantial VRAM capacity. In practical terms, it preserves deployment capability while narrowing peak performance headroom.

RTX 6000D vs RTX PRO 6000 Specifications

| Specification | RTX 6000D (China) | RTX PRO 6000 |
|---|---|---|
| Architecture | Blackwell (GB202-891) | Blackwell (GB202) |
| Streaming Multiprocessors | 156 SMs | 188 SMs |
| CUDA Cores | 19,968 | 24,064 |
| Memory Capacity | 84GB GDDR7 | 96GB GDDR7 (ECC) |
| Memory Modules | 28 × 3GB | 32 × 3GB |
| Memory Bus | 448-bit | 512-bit |
| Boost Clock | ~2430 MHz | ~2600 MHz |
| Power Limit | 600W | 600W |
| Cooling | Passive (Server-focused) | Workstation / Max-Q / Server variants |

With 3GB GDDR7 modules, a 448-bit bus, 156 enabled SMs, and moderated tensor throughput, the RTX 6000D represents a distinct branch of the RTX PRO 6000 family rather than a rebadged SKU. The teardown provides concrete evidence of how NVIDIA is tailoring its highest-end Blackwell workstation GPUs for specific markets while retaining architectural continuity with the GB202 platform.

Source: Bilibili (In Chinese)