Most laptops are not built for deep learning or machine learning work, even in 2026. Marketing terms like “AI-ready” or “Copilot PC” don’t change the reality that serious ML workloads still depend on GPU power, memory bandwidth, and cooling that many thin laptops simply can’t sustain.

Local work matters more than people expect. Cloud platforms such as Google Colab are useful for quick tests, but session limits, queue times, and recurring costs make them unreliable for day-to-day development. Anyone training models, working with CUDA-based frameworks, or handling large datasets eventually runs into those limits.

This is why gaming-class laptops dominate this space. Dedicated NVIDIA GPUs, higher power limits, and larger cooling systems make a significant difference when workloads run for more than a few minutes. The CPU choice matters, but GPU performance and VRAM capacity typically become the primary bottleneck.

The laptops selected here are based on how well they handle real-world machine learning and deep learning workloads today, not on branding or feature checklists.

Some are suited for heavy local training, while others are ideal for students and developers learning AI on their own machines.

Overview: Top 3 Best Laptops for ML, Deep Learning, and AI

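| Image | Product | Feature | Price |

|---|---|---|---|

| | Alienware 18 Area-51 | Best for large-scale local AI training | Check Price |

| | ASUS ROG Strix G16 | Best balance of cooling and sustained GPU performance | Check Price |

| | Alienware 16 Aurora | Best laptop for AI students and beginners | Check Price |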

Recent Updates

January 16, 2026: I updated this article by adding the Alienware 16 Aurora and Lenovo Legion 5i to reflect newer and more powerful options for 2026. I replaced older models, swapping the Apple MacBook Pro M4 for the M5 model and the Microsoft Surface Pro 9 for the Surface Pro 11. I also removed the Acer Swift 14 AI because it is no longer widely available and does not offer good value in 2026.

October 16, 2021: This article was first published.

What to Look for in a Laptop for Deep Learning and AI

Hardware choice matters more for machine learning and deep learning than it does for most other workloads. Training models locally puts sustained pressure on the GPU, memory, and cooling system, and weaknesses in any of these areas show up quickly once workloads run longer than a few minutes.

Rather than focusing on marketing labels or raw spec numbers, it’s more useful to understand where real bottlenecks appear.

GPU Comes First – and VRAM Matters More Than You Think

For most deep learning frameworks, the GPU becomes the limiting factor long before the CPU does. CUDA-based acceleration is still essential for tools like TensorFlow and PyTorch, making a dedicated NVIDIA GPU practically mandatory for serious local work.

Integrated graphics, regardless of generation, are unsuitable for model training and should only be used for learning, inference, or general development tasks.

VRAM capacity is often more important than the raw GPU model number. The laptop RTX 4070, for example, ships with only 8GB of VRAM and can hit memory limits surprisingly quickly when working with large models or larger batch sizes.

Practically speaking, 8GB of VRAM is only sufficient for small models and learning projects. For consistent local training without frequently encountering memory limits, 12GB should be considered the practical minimum, while 16GB or more provides significantly more headroom.
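As a rough illustration of why VRAM runs out so quickly, the sketch below estimates the memory needed just for a model's weights, gradients, and optimizer state. It assumes plain fp32 training with the Adam optimizer (4 bytes each for weights and gradients plus 8 bytes for Adam's two moment buffers, so 16 bytes per parameter) and ignores activations, which grow with batch size. The function name is our own, not a library API.

```python
GIB = 2**30  # bytes per GiB

# Assumption: fp32 weights (4 B) + fp32 gradients (4 B) + Adam's two fp32 moments (8 B)
BYTES_PER_PARAM_FP32_ADAM = 16


def static_training_vram_gib(num_params: int,
                             bytes_per_param: int = BYTES_PER_PARAM_FP32_ADAM) -> float:
    """Rough lower bound on VRAM for weights, gradients, and optimizer state.

    Activations, framework overhead, and CUDA context memory are NOT included,
    so real usage during training will be higher.
    """
    return num_params * bytes_per_param / GIB


if __name__ == "__main__":
    for params in (100_000_000, 350_000_000, 1_000_000_000):
        gib = static_training_vram_gib(params)
        print(f"{params / 1e6:>6.0f}M params -> {gib:5.1f} GiB before activations")
```

Under these assumptions, a 1-billion-parameter model needs roughly 14.9 GiB before a single activation is stored, which is why 8GB cards are limited to small models and even 16GB fills up quickly. Mixed-precision training and memory-saving optimizers change the per-parameter figure, so treat the result as a ballpark rather than a guarantee.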

NPU and “AI Laptop” Branding: What Actually Matters

Many modern laptops advertise built-in NPUs or “AI acceleration,” but these are designed for lightweight inference tasks such as background effects, voice processing, or on-device assistants. They do not replace a discrete GPU for training machine learning models.

For frameworks like TensorFlow and PyTorch, CUDA-enabled NVIDIA GPUs remain the practical requirement for local training. NPUs can complement certain workflows, but they should not be the deciding factor when choosing a deep learning laptop.

CPU: Important, but Not the Bottleneck

Modern multi-core CPUs are fast enough that they rarely limit machine learning workloads on their own. Any recent high-performance Intel Core i7, i9, or Core Ultra processor, as well as AMD Ryzen 7 or Ryzen 9 chips from recent generations, is sufficient for most users.

The choice of CPU is more important for data preprocessing, compilation, and multitasking, but once training begins, the GPU’s capabilities dominate the performance.

Memory: 16GB Is the Baseline, Not the Target

Machine learning workloads consume memory quickly, especially when working with multiple datasets, notebooks, or virtual environments. While 16GB of RAM can work for learning and smaller projects, it becomes restrictive faster than most people expect.

For smoother workflows and fewer slowdowns, 32GB of RAM is a far more practical target in 2026. Upgradability is also worth checking, as some laptops lock memory at purchase.
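A quick way to sanity-check RAM needs is to estimate how large a dataset is once it is loaded. The hypothetical helper below assumes a dense numeric table stored as float32 (4 bytes per value) and uses a `copies` factor to approximate the temporary copies that preprocessing code often creates; both assumptions vary a lot by library and workflow.

```python
GIB = 2**30  # bytes per GiB


def dataset_ram_gib(rows: int, columns: int, bytes_per_value: int = 4, copies: int = 1) -> float:
    """Approximate RAM for a dense numeric table held in memory.

    bytes_per_value=4 assumes float32 values; use 8 for float64.
    copies is an assumed multiplier for intermediate copies made while
    cleaning, normalizing, or splitting the data.
    """
    return rows * columns * bytes_per_value * copies / GIB


if __name__ == "__main__":
    print(f"{dataset_ram_gib(10_000_000, 200):.2f} GiB loaded once")
    print(f"{dataset_ram_gib(10_000_000, 200, copies=2):.2f} GiB with one extra copy")
```

For example, 10 million rows with 200 float32 features come to about 7.45 GiB; one intermediate copy (`copies=2`) doubles that to about 14.9 GiB, more than a 16GB laptop has free once the OS, browser, and IDE are running.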

GPU performance and VRAM capacity cannot be upgraded later, which makes the initial GPU choice especially important. Memory and storage are sometimes upgradeable, but this varies by model and should be verified before purchase.

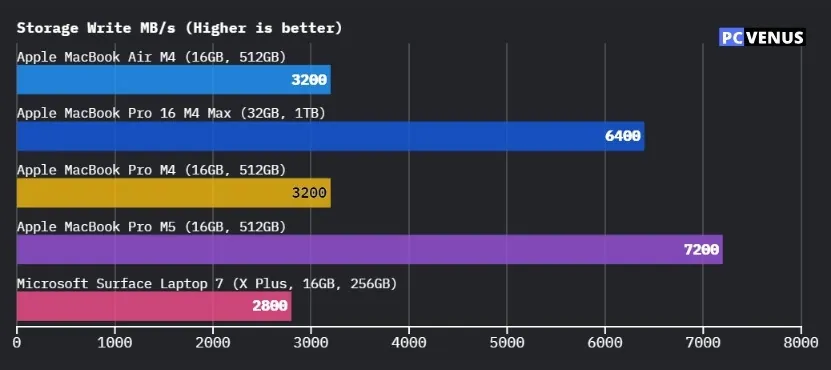

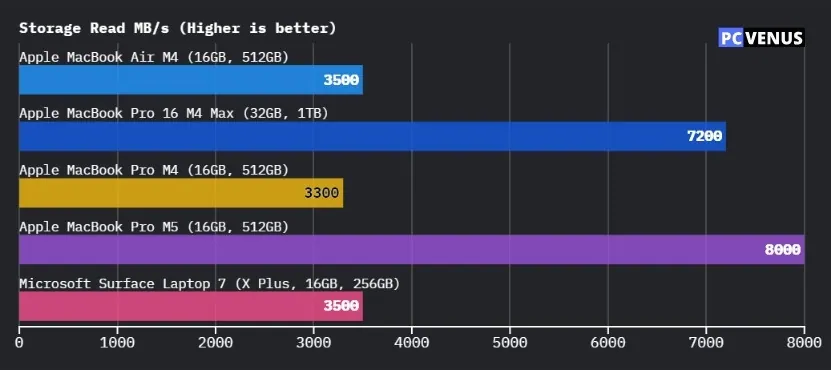

Storage: Fast SSDs Matter More Than Capacity Numbers

Large datasets, model checkpoints, and development environments quickly consume storage space. Smaller SSDs fill up rapidly, requiring frequent manual cleanup and disrupting workflows. For most deep learning and AI tasks, at least 512GB of SSD storage is practical, while 1TB or more provides better long-term flexibility and future-proofing.
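Checkpoints are often what fills a small SSD. The sketch below estimates disk usage for one training run under a stated assumption: each resumable checkpoint stores fp32 weights (4 bytes per parameter) plus Adam's two fp32 moment buffers (8 bytes per parameter). Actual file sizes depend on the framework and save format, and the helper name is hypothetical.

```python
GIB = 2**30  # bytes per GiB

# Assumption: fp32 weights (4 B/param) + Adam's two fp32 moments (8 B/param) per checkpoint
CHECKPOINT_BYTES_PER_PARAM = 12


def checkpoint_storage_gib(num_params: int, checkpoints_kept: int,
                           bytes_per_param: int = CHECKPOINT_BYTES_PER_PARAM) -> float:
    """Approximate disk space used by all checkpoints kept for one training run."""
    return num_params * bytes_per_param * checkpoints_kept / GIB


if __name__ == "__main__":
    per_ckpt = checkpoint_storage_gib(350_000_000, 1)
    total = checkpoint_storage_gib(350_000_000, 20)
    print(f"{per_ckpt:.1f} GiB per checkpoint, {total:.0f} GiB for 20 checkpoints")
```

For a 350-million-parameter model, that works out to roughly 3.9 GiB per checkpoint and about 78 GiB if 20 checkpoints are kept, so a handful of experiments can consume most of a 512GB drive.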

Storage speed is just as important as capacity. NVMe SSDs significantly reduce loading times for large datasets, virtual environments, and project files. With frequent training runs and experiments, this time savings adds up and noticeably improves daily productivity.

Cooling and Sustained Power Are Often Overlooked

Many thin and lightweight laptops appear powerful on paper, but they struggle to maintain performance under sustained workloads. Deep learning, machine learning, and AI development place continuous heavy stress on the CPU and GPU, which often leads to thermal throttling in slim designs.

Laptops with larger cooling systems and higher power limits can hold stable performance for much longer periods, especially during extended training sessions. This is a key reason gaming-class laptops continue to be widely used for AI workloads, even though they are heavier and less portable.
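One practical way to check whether a laptop holds its performance is to log how long each training step takes and compare the start of a long run with the end. The sketch below is a hypothetical helper, not part of any framework: it reports the fractional slowdown between the first and last windows of step times, so a clearly positive value over a long run suggests thermal or power throttling.

```python
from statistics import fmean
from typing import Sequence


def sustained_slowdown(step_times: Sequence[float], window: int = 50) -> float:
    """Fractional slowdown of the last `window` steps versus the first `window` steps.

    0.0 means no change; 0.25 means late steps take 25% longer on average.
    """
    if window <= 0 or len(step_times) < 2 * window:
        raise ValueError("need at least two full windows of step timings")
    early = fmean(step_times[:window])   # baseline: average of the first window
    late = fmean(step_times[-window:])   # average of the most recent window
    return (late - early) / early
```

Step durations can be recorded with `time.perf_counter()` around each training step. It is usually worth discarding the first few steps, which are often slower because of warm-up work such as kernel selection or compilation. A result near 0.0 after an hour of training indicates sustained performance; a value like 0.25 means late steps take 25% longer than early ones.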

Software and Platform Compatibility

Most deep learning and AI workflows rely on CUDA-based tools, making Windows and Linux laptops with NVIDIA GPUs the most flexible and widely supported option. These platforms offer superior compatibility with popular frameworks, libraries, and GPU-accelerated training pipelines.

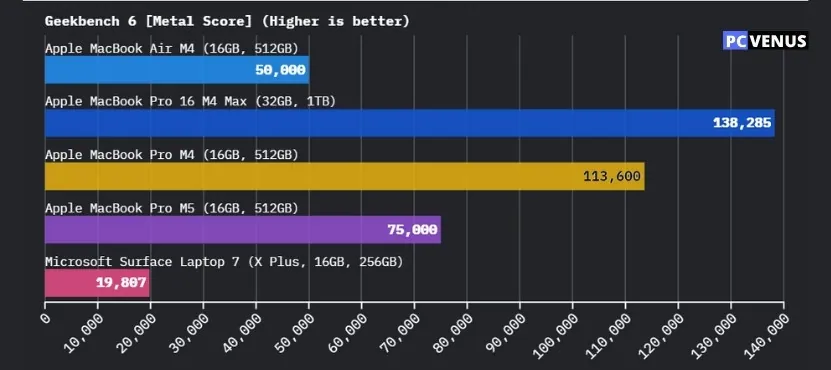

macOS systems are excellent for coding, experimentation, and light development tasks. However, they are limited for GPU-accelerated training workloads due to the lack of CUDA support, which restricts performance for large-scale model training.
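In practice, scripts meant to run on both NVIDIA laptops and Macs usually pick a device at startup. The sketch below assumes PyTorch is the framework: `torch.cuda.is_available()` and `torch.backends.mps.is_available()` are real PyTorch calls (the MPS backend arrived in PyTorch 1.12), while `choose_backend` and `detect_device` are our own hypothetical helper names.

```python
def choose_backend(cuda_available: bool, mps_available: bool) -> str:
    """Prefer CUDA, then Apple's MPS backend, then fall back to the CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def detect_device() -> str:
    """Return the best available PyTorch device string, or "cpu" if PyTorch is absent."""
    try:
        import torch
    except ImportError:
        return "cpu"
    mps_backend = getattr(torch.backends, "mps", None)  # older PyTorch builds lack MPS
    mps_ok = mps_backend is not None and mps_backend.is_available()
    return choose_backend(torch.cuda.is_available(), mps_ok)


if __name__ == "__main__":
    print("Training device:", detect_device())
```

On an NVIDIA laptop with a CUDA-enabled build this returns `"cuda"`, on an Apple Silicon Mac with a recent PyTorch build it returns `"mps"`, and otherwise `"cpu"`. The string can be passed to `torch.device()` or `model.to()`. CUDA is checked first because it is the better-supported path for training.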

| Tool / Platform | CPU (Minimum) | RAM (Minimum) | GPU Requirement | VRAM (Min / Rec.) | OS Support |

|---|---|---|---|---|---|

| PyTorch | Modern CPU (AVX2 support) | 8GB (16GB rec.) | NVIDIA (CUDA-capable GPU) or Apple Silicon (MPS) | 4GB / 8GB+ | Windows / Linux / macOS |

| TensorFlow | Modern CPU (AVX support) | 8GB (16GB rec.) | NVIDIA (CUDA + cuDNN) or Apple Silicon (Metal plugin) | 4GB / 8GB+ | Linux / macOS / Windows (GPU via WSL2) |

| CUDA Toolkit | x86_64 architecture | 8GB | NVIDIA GPU (Compute Capability 5.0+) | 4GB / 8GB+ | Windows / Linux (incl. WSL2) |

| Jupyter Notebook | Any modern dual-core | 4GB (8GB rec.) | Optional (depends on libraries used) | N/A | Windows / Linux / macOS |

| Inference Only | Modern quad-core | 8GB | Optional (integrated graphics okay) | N/A | Windows / Linux / macOS |

| Cloud Training | Any basic CPU | 4GB+ | Provided by cloud (T4/A100/H100) | N/A | Any (via browser) |

The Best Laptops for Deep Learning, Machine Learning, and AI

Not all laptops in this guide are designed for the same level of AI workload. Some are capable of sustained local training, while others are better suited for learning, experimentation, or development work.

To keep comparisons practical, the laptops are grouped by performance tier.

Premium Picks (For Heavy AI and Deep Learning Workloads)

- Alienware 18 Area-51 — Best for large-scale local AI training

- Lenovo Legion Pro 7i Gen 10 — Best workstation-class Windows laptop for ML

- ASUS ROG Strix G16 — Best balance of cooling and sustained GPU performance

Mid-Range Picks (For Local Development and Moderate Training)

- Alienware 16 Aurora — Best laptop for AI students and beginners

- MSI Katana A17 AI — Best AMD-powered option for ML workloads

- Lenovo Legion 5i — Best for AI Learning

- Acer Nitro V — Best budget-friendly NVIDIA GPU laptop for ML

- ASUS TUF Gaming A14 — Best compact laptop for AI development and study

Learning & Development Picks (Not for Heavy Training)

- Apple MacBook Pro M5 — Best macOS laptop for AI researchers

- Microsoft Surface Pro 11 — Best for AI tools, learning, and everyday work

- ASUS Vivobook 14 — Most affordable entry-level laptop with AI

How We Selected and Tested These Laptops

We selected these laptops based on real machine learning and AI workloads rather than specifications. The focus was on how each system behaves during sustained use, not short benchmark runs.

During testing, we evaluated GPU performance, VRAM limits, memory usage, and thermal behavior while running common ML tasks, notebooks, and training workloads. We also paid close attention to stability, fan noise under load, and whether performance dropped during longer sessions.

Benchmark tools were used where helpful, but final recommendations are based on practical use and consistency over time. Laptops that struggled with heat, memory limits, or unstable performance were excluded, even if their specifications looked good on paper.

View our full laptop testing and review process

Premium Picks (For Heavy AI & Deep Learning Workloads)

| Laptop’s Model | GPU (VRAM) | CPU | RAM | Storage | Display | Weight | Best For |

|---|---|---|---|---|---|---|---|

| Alienware 18 Area-51 | NVIDIA GeForce RTX 5080 (16GB VRAM) | Intel Core Ultra 9 275HX | 32GB DDR5 | 2TB SSD | 18-inch WQXGA, 300Hz | 4.34 kg (9.57 lb) | Large models, long training runs, maximum thermal headroom |

| Lenovo Legion Pro 7i Gen 10 | NVIDIA GeForce RTX 5080 (16GB VRAM) | Intel Core Ultra 9 275HX | 64GB DDR5 | 2TB SSD | 16-inch WQXGA, 240Hz | 2.64 kg (5.83 lb) | Workstation-class ML workloads, memory-heavy training |

| ASUS ROG Strix G16 | NVIDIA GeForce RTX 5070 Ti (12GB VRAM) | Intel Core Ultra 9 275HX | 32GB DDR5 | 1TB SSD | 16-inch QHD, 240Hz | 2.50 kg (5.51 lb) | Balanced high-end performance with strong sustained cooling |

Best Laptop for Heavy AI and Deep Learning Workloads

1. Alienware 18 Area-51

Key Specs

| Processor | Intel Core Ultra 9 275HX |

| Graphics | NVIDIA RTX 5080 |

| Memory | 32GB DDR5 |

| Storage | 2TB SSD |

| Display | 18-inch WQXGA, 300Hz |

| Weight | 9.57 lb (4.34 kg) |

Check Current Pricing

If you plan to train models on your own PC, the Alienware 18 Area-51 feels less like a laptop and more like a portable workstation. It is built for users who care about steady GPU power, not battery life or easy portability.

This laptop is best for researchers, engineers, and advanced users who run CUDA-based workloads, train larger models locally, or work with big datasets. Compared to thinner laptops like the Dell XPS series, it gives up portability in exchange for stronger cooling and more stable performance during long AI tasks.

Performance

CPU performance is not a concern here. The system comes with Intel’s Core Ultra 9 275HX, a 24-core processor that easily handles data preparation, compiling code, and multitasking while the GPU is under load.

The main reason to choose this laptop is the GPU. Alienware uses an NVIDIA GeForce RTX 5080 with 16GB of GDDR7 VRAM. That extra VRAM pays off once models grow larger or batch sizes increase, while GPUs with 8GB or 12GB of memory hit out-of-memory limits much earlier during training.
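To make the batch-size point concrete, the sketch below assumes activation memory grows roughly linearly with batch size on top of a fixed cost for weights, gradients, and optimizer state. The 6 GiB fixed cost and 0.25 GiB per sample used in the tests and example are hypothetical numbers chosen for illustration; real values depend on the model and should be measured.

```python
import math


def max_batch_size(vram_gib: float, static_gib: float, per_sample_gib: float,
                   reserve_gib: float = 0.0) -> int:
    """Largest batch that fits if activation memory grows linearly with batch size.

    static_gib: weights + gradients + optimizer state (independent of batch size)
    per_sample_gib: activation memory per training example (model-dependent; measure it)
    reserve_gib: optional headroom for the CUDA context and allocator fragmentation
    """
    free = vram_gib - static_gib - reserve_gib
    if free <= 0:
        return 0  # the model state alone does not fit
    return math.floor(free / per_sample_gib)


if __name__ == "__main__":
    for vram in (8, 12, 16):
        print(f"{vram} GiB VRAM -> batch size {max_batch_size(vram, 6.0, 0.25)}")
```

With those hypothetical numbers (and treating nominal capacity as GiB for simplicity), an 8GB card fits a batch of 8, a 12GB card fits 24, and a 16GB card fits 40. Doubling VRAM allows five times the batch size here because the fixed cost is paid only once.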

Frameworks like TensorFlow and PyTorch mainly depend on the GPU, and that’s where this laptop performs best. The built-in NPU is useful for small on-device AI features, but serious training work is handled by the RTX GPU.

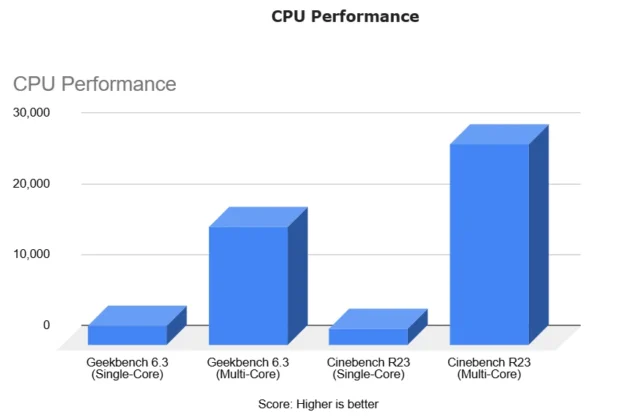

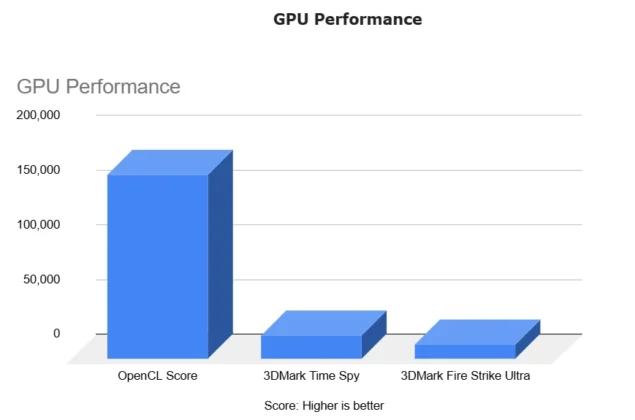

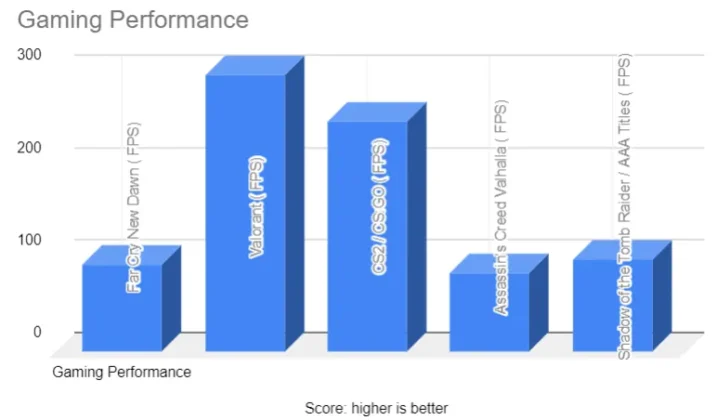

Benchmark results are in line with other high-end HX-class systems. In real use, GPU strength and VRAM size matter far more than CPU scores, and this system is clearly built around that idea.

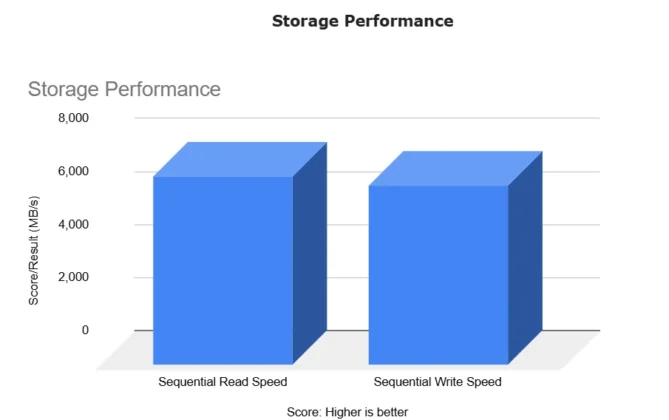

Memory and storage are well suited for AI work. With 32GB of DDR5 RAM and a fast 2TB PCIe Gen 5 SSD, large datasets, environments, and checkpoints load quickly, which makes daily work smoother.

Display and Design

The 18-inch WQXGA display gives plenty of space for code, charts, and dashboards. The high refresh rate also makes scrolling and window movement feel smoother during long sessions.

Alienware’s cooling design slightly raises the laptop to improve airflow. This helps the system keep higher power levels for longer periods. The transparent panel and RGB lighting give it a bold look, which may or may not appeal to everyone.

At 4.34 kg (9.57 lb), this is a very heavy laptop. It is not meant to be carried around often and works best as a desk-based system.

Cooling and Audio

Cooling is one of this laptop’s strongest points. During heavy ML or GPU workloads, the system is built to keep temperatures under control without quickly slowing down performance.

Under sustained load:

- Fan noise becomes clearly audible

- Temperatures stay within safe operating limits for high-power GPUs

This is normal behavior for laptops in this performance class. Thinner systems often struggle more under similar workloads.

Speaker quality is good for calls, videos, and general use, but audio is not a focus for a machine designed mainly for compute-heavy work.

Battery

Battery life is limited, as expected. For light tasks like browsing or basic coding, you can expect around 3 to 4 hours of use.

During machine learning or deep learning tasks that use the GPU heavily, battery life drops quickly. In practical terms, expect well under 2 hours, and often much less, depending on workload. For AI training, this laptop is designed to be used while plugged in.

The included 360W power adapter provides stable power, which is necessary to maintain full performance during long training runs.


Pros

✔ Strong GPU performance with 16GB VRAM for larger models

✔ Cooling designed for long training sessions

✔ Large display improves productivity and visualization

✔ Fast PCIe Gen 5 storage for big datasets

✔ Desktop-like performance in a portable form

Cons

✘ Very heavy and not easy to carry

✘ Short battery life during AI workloads

✘ Expensive and aimed at advanced users

Best Workstation-Class Laptop for AI and Deep Learning

2. Lenovo Legion Pro 7i Gen 10

Key Specs

| Processor | Intel Core Ultra 9 275HX |

| Graphics | NVIDIA RTX 5080 |

| Memory | 64GB DDR5 |

| Storage | 2TB SSD |

| Display | 16-inch WQXGA, 240Hz |

| Weight | 5.83 lb (2.64 kg) |

Check Current Pricing

The Lenovo Legion Pro 7i Gen 10 is designed for users who need reliable, workstation-level performance for AI and deep learning, without resorting to a bulky 18-inch desktop replacement laptop.

It’s ideal for researchers, advanced students, and AI engineers who regularly work with large datasets, GPU-accelerated training, and heavy multitasking, where stability during long sessions is more important than portability or battery life.

Performance

The Core Ultra 9 275HX is a 24-core HX-class processor that handles data preprocessing, compilation, and parallel workloads without slowing down GPU-focused training tasks. During extended workloads, the CPU does not become a limiting factor when the GPU is under sustained load.

The NVIDIA GeForce RTX 5080 and its 16GB of VRAM provide enough GPU performance and memory headroom for demanding deep learning workflows. In practical use, this allows larger batch sizes and longer training runs in frameworks like TensorFlow and PyTorch without frequent memory limits or interruptions.

With 64GB of DDR5 RAM and a 2TB NVMe SSD configuration, the system comfortably handles large datasets, multiple environments, and frequent experimentation. Storage and memory constraints are rarely an issue during day-to-day AI development work.

Display and Design

The 16-inch WQXGA display offers sufficient workspace for code editors, logs, and monitoring dashboards without making the laptop unnecessarily large. The high refresh rate makes scrolling through long files and visual outputs noticeably smoother during extended work sessions.

The chassis is built around performance rather than thinness. While heavier than standard productivity laptops, the extra size allows better thermal headroom during long CPU and GPU workloads.

Cooling and Audio

Thermal performance is a strong point of the Legion Pro 7i Gen 10. The multi-fan cooling system and advanced thermal design are tuned for sustained workloads rather than short performance bursts.

Fan noise increases during heavy training runs, which is expected at this power level, but performance remains stable over time without sudden throttling. This makes it suitable for long AI training sessions where consistency matters.

Audio quality is adequate for calls and media, but it is not a focus for a performance-oriented system like this.

Battery

Battery life is limited when running demanding workloads. Light tasks such as browsing or basic coding last 3-4 hours, but GPU-intensive AI tasks drain the battery quickly.

For machine learning and deep learning work, the laptop is intended to be used while plugged in to maintain consistent performance.

Why It Works Well for AI and Deep Learning

The Legion Pro 7i Gen 10 delivers strong GPU performance, high memory capacity, and stable thermals in a form factor that is easier to manage than larger 18-inch systems. It is well-suited for users who need dependable local performance for training and experimentation without committing to a full desktop replacement.

Pros

✔ RTX GPU handles demanding AI workloads

✔ 64GB RAM supports large datasets

✔ Stable cooling under long training sessions

✔ Large, smooth display for productivity

✔ Good port selection for peripherals

Cons

✘ Heavier than typical work laptops

✘ Short battery life during AI tasks

✘ Expensive for casual users

Benchmark/Test

Want full benchmarks and detailed testing? Read our complete Lenovo Legion Pro 7i Gen 10 review.

Best High-Performance Laptop for AI & ML

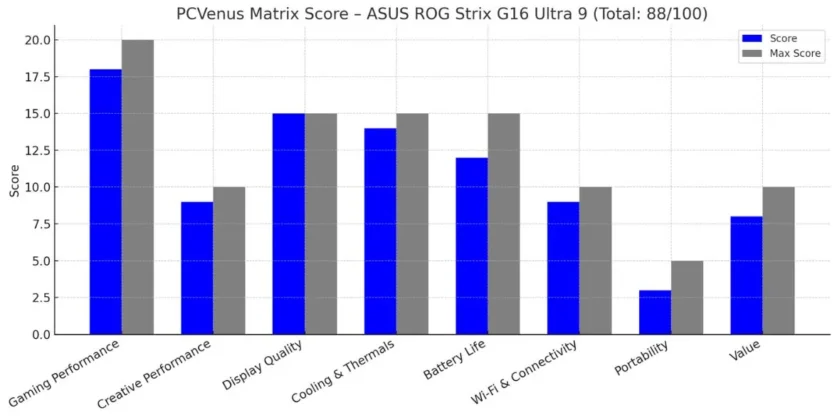

3. ASUS ROG Strix G16

Key Specs

| Processor | Intel Core Ultra 9 275HX |

| Graphics | NVIDIA RTX 5070 Ti |

| Memory | 32GB DDR5 |

| Storage | 1TB SSD |

| Display | 16-inch QHD, 240Hz |

| Weight | 5.51 lb (2.50 kg) |

Check Current Pricing

The ASUS ROG Strix G16 is a strong choice for users who want high AI and machine learning performance without moving to extremely large and heavy workstation laptops. It offers a balanced mix of power, cooling, and everyday usability.

This laptop works well for AI developers, advanced students, and professionals who train models locally, run CUDA-based workloads, or handle GPU-accelerated tasks for long periods.

Performance

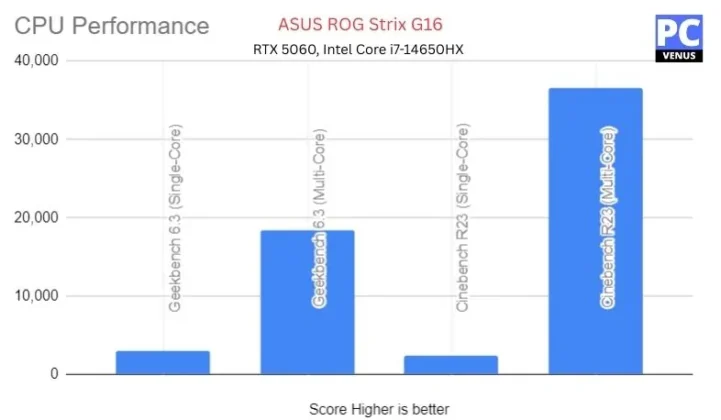

CPU performance is handled by Intel’s Core Ultra 9 275HX, a 24-core HX-class processor that manages data preparation, multitasking, and development work efficiently while the GPU is under sustained load.

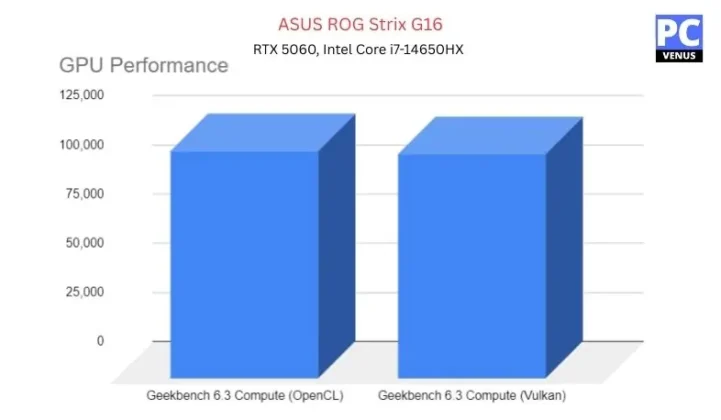

The key advantage here is the NVIDIA GeForce RTX 5070 Ti with 12GB of VRAM. This GPU provides enough memory and compute power for many machine learning and deep learning workloads, including medium to large models and higher batch sizes. Compared to GPUs with lower VRAM, it allows more headroom before memory limits become a constraint.

Frameworks such as TensorFlow and PyTorch rely heavily on the discrete GPU, and this system is built around that requirement. While it does not match the VRAM capacity of flagship GPUs, it delivers consistent performance for most real-world AI workloads, especially during longer training sessions.

This GPU also benefits from the Strix G16’s higher power limits and cooling design, which helps maintain stable performance during extended workloads.

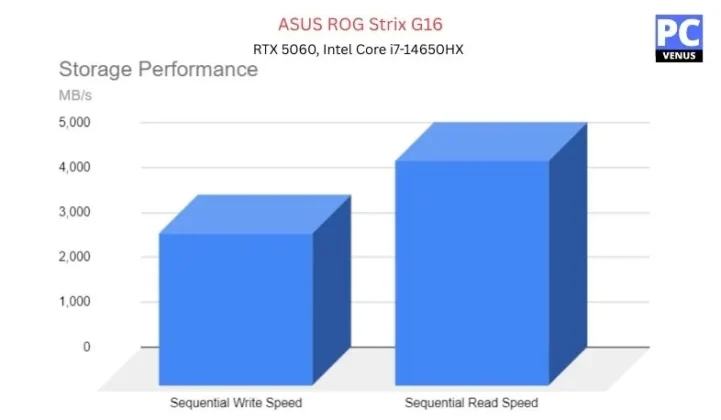

The laptop comes with 32GB of DDR5 RAM and a 1TB PCIe SSD, which is sufficient for working with large datasets, multiple environments, and frequent experimentation. Storage and memory performance remain responsive during day-to-day development work.

Display and Design

The 16-inch QHD display offers a good balance between screen size and portability. The 240Hz refresh rate makes scrolling and window movement smooth, which helps during long coding or analysis sessions.

Color accuracy is strong, which is useful when working with visualizations, charts, or image-based models alongside development tasks.

The overall design is performance-focused. While the laptop is not slim, the extra size allows for better cooling and higher power limits than thinner systems.

Cooling and Audio

ASUS uses an advanced thermal design with liquid metal and multiple fans to keep temperatures under control during extended workloads.

Under heavy GPU and CPU use, fan noise becomes noticeable, but performance remains stable without sudden drops or throttling. Many thinner laptops throttle under similar sustained loads, which makes cooling a clear advantage here.

Speaker quality is good for calls, videos, and general use, though audio is not a main focus for a performance-oriented laptop.

Battery

Battery life is limited when performance is the priority. Light tasks such as browsing or basic coding can last 3-4 hours, while AI and GPU-heavy workloads drain the battery much faster, typically to around 1.5-3 hours.

For machine learning and deep learning work, the Strix G16 is best used while plugged in to maintain consistent performance. This trade-off is typical for performance-focused AI laptops.

Why It Works Well for AI and Machine Learning

The ASUS ROG Strix G16 offers a strong balance between power and practicality. Compared to budget RTX 4050 or RTX 4060 laptops, it delivers better cooling and more consistent performance under heavy, sustained workloads.

Compared to larger workstation-class systems like the Alienware 18 Area-51, it is easier to manage while still providing serious GPU capability.

Pros

✔ RTX GPU handles demanding AI workloads

✔ Strong cooling for long training sessions

✔ Smooth display for coding and visualization

✔ Fast performance for daily development

✔ Solid build quality

Cons

✘ Bulky compared to thin productivity laptops

✘ Battery life drops quickly under AI workloads

✘ Pricing may be high for casual users

Read our ASUS ROG Strix G16 Gaming Laptop Review

Mid-Range Picks

| Laptop Model | CPU | GPU (VRAM) | RAM | Storage | Display | Weight | Best For |

|---|---|---|---|---|---|---|---|

| Alienware 16 Aurora | Intel Core 7 240H | NVIDIA GeForce RTX 5050 (8GB) | 16GB DDR5 | 1TB SSD | 16″ WQXGA, 120Hz | 5.49 lbs (2.49 kg) | Entry-level RTX 50-series AI workloads & development |

| Lenovo Legion 5i | Intel Core i7-13650HX | NVIDIA GeForce RTX 5050 (8GB) | 16GB DDR5 | 512GB SSD | 15.3″ WUXGA, 120Hz | 4.6 lbs (2.1 kg) | Learning ML and AI development |

| MSI Katana A17 AI | AMD Ryzen 9 8945HS | NVIDIA GeForce RTX 4070 (8GB) | 16GB DDR5 | 2TB SSD | 17.3″ QHD, 240Hz | 5.95 lbs (2.70 kg) | Moderate local training, CUDA-based ML workloads |

| Acer Nitro V 16 | AMD Ryzen 7 8845HS | NVIDIA GeForce RTX 4060 (8GB) | 16GB DDR5 | 1TB SSD | 16″ WUXGA, 165Hz | 5.51 lbs (2.50 kg) | Budget-friendly ML development & experimentation |

| ASUS TUF Gaming A14 | AMD Ryzen 7 8845HS | NVIDIA GeForce RTX 4050 (6GB) | 16GB LPDDR5X | 512GB SSD | 14″ WQXGA, 165Hz | 3.22 lbs (1.46 kg) | Portable ML learning, coding, and light training |

Best for Beginners

1. Alienware 16 Aurora

Key Specs

| Processor | Intel Core 7 240H |

| Graphics | NVIDIA RTX 5050 |

| Memory | 16GB DDR5 |

| Storage | 1TB SSD |

| Display | 16-inch WQXGA, 120Hz |

| Weight | 5.49 lb (2.49 kg) |

Check Current Pricing

We picked the Alienware 16 Aurora for users who want access to NVIDIA’s RTX 50-series graphics without moving to large, heavy workstation-class laptops. It focuses on practical performance, modern connectivity, and a more portable design.

This laptop is best suited for students, beginners, and developers who are learning machine learning, experimenting with AI models, or running GPU-accelerated workloads that do not require large VRAM capacity or long training sessions.

Performance

The system uses Intel’s Core 7 240H processor, a modern 10-core CPU that handles everyday development tasks such as coding, data preparation, and multitasking without issues. For AI learning and experimentation workflows, the CPU does not become a limiting factor.

The main advantage here is the NVIDIA GeForce RTX 5050 with 8GB of GDDR7 VRAM at an affordable price. This GPU supports CUDA and modern AI features, making it suitable for learning machine learning frameworks, running inference, and training smaller models. Compared to integrated graphics or older entry-level GPUs, it provides a noticeable improvement in capability.

Frameworks such as TensorFlow and PyTorch can take advantage of the discrete GPU for acceleration, but the 8GB VRAM capacity limits its use for large models or extended training runs. It is best suited for experimentation, coursework, and development work rather than full-scale research training.

The GPU operates within conservative power limits, which helps maintain stable performance during typical learning and development workloads.

The laptop includes 16GB of DDR5 RAM running at 5600 MT/s and a 1TB NVMe SSD. This setup is sufficient for datasets, environments, and project files, and the memory can be upgraded up to 32GB as workloads grow.

Display and Design

The 16-inch WQXGA display offers a sharp resolution and a 120Hz refresh rate. It provides enough space for code editors, notebooks, and visualizations, while the matte finish helps reduce glare during long work sessions.

Alienware’s updated design places more emphasis on portability than its larger models. The absence of a rear thermal shelf makes the laptop easier to carry and fit into backpacks. At 5.49 lb (2.49 kg), it is noticeably more manageable than heavier AI-focused laptops.

Cooling and Audio

The Alienware 16 Aurora uses a redesigned Cryo-Chamber cooling system that directs airflow toward key components. This cooling setup is intended to keep performance stable during moderate sustained workloads rather than push extreme power levels.

Under moderate GPU and CPU loads, fan noise remains controlled. During heavier tasks, fans become more noticeable, which is expected for a performance laptop in this size class. Cooling behavior prioritizes consistency and component safety over peak performance bursts.

Audio quality is good for calls, videos, and general use, supported by stereo speakers with Dolby Audio. While audio is not a focus area, it is adequate for everyday needs.

Battery

The laptop includes a 96Wh battery, which is large for a 16-inch system. Light tasks such as browsing, writing code, or watching videos can last several hours.

When running GPU-accelerated AI or machine learning workloads, battery life drops quickly. For practical use, this laptop performs best when plugged in during AI-related tasks.

Connectivity and Ports

Connectivity is a strong point. The Alienware 16 Aurora supports Wi-Fi 7 and Bluetooth 5.4, the latest wireless standards.

Port selection includes:

- HDMI 2.1 for external displays

- Multiple USB-A and USB-C ports

- Ethernet (RJ-45)

- Headphone jack

This makes it easy to connect external monitors, storage devices, and other peripherals commonly used in development workflows.

Why It Works Well for AI and Machine Learning

The Alienware 16 Aurora offers a practical way to get started with GPU-accelerated AI workloads. It provides modern RTX graphics, a high-resolution display, and good portability, without the size or cost of a workstation-class laptop.

It’s ideal for learning, experimenting, inference, and small training workloads where CUDA acceleration is beneficial but extreme GPU power isn’t required.

Pros

✔ RTX 5050 GPU supports CUDA-based AI workflows

✔ Capable CPU for development and multitasking

✔ Sharp 16-inch display with 120Hz refresh rate

✔ More portable than workstation-class laptops

✔ Strong connectivity with Wi-Fi 7 and multiple ports

Cons

✘ 8GB VRAM limits large model training

✘ Not suitable for long, heavy AI workloads

✘ Battery life drops quickly under GPU use

Best for Learning

2. Lenovo Legion 5i

Key Specs

| Processor | Intel Core i7-13650HX |

| Graphics | NVIDIA RTX 5050 |

| Memory | 16GB DDR5 |

| Storage | 512GB SSD |

| Display | 15.3-inch 2K WQXGA, 120Hz |

| Weight | 4.6 lbs (2.1 kg) |

Check Current Pricing

The Lenovo Legion 5i is a good choice for people who want to learn AI and machine learning on their own laptop without buying a very expensive, high-power system. It is not made for heavy research work, and it does not try to be one.

This laptop fits students and beginners who want to run AI tools on their laptop, see how models work, and build projects step by step before moving to cloud services.

Why the Legion 5i Is Good for Learning AI

When learning machine learning, speed is usually not the biggest problem.

What matters more is whether the laptop runs tools smoothly without crashing, overheating, or slowing down too fast.

The Legion 5i can handle common learning tasks like:

- Running Jupyter notebooks

- Training small to medium models

- Using TensorFlow and PyTorch

- Testing models on the laptop before using cloud platforms

This helps learners understand AI in a practical way instead of depending only on online tools.

Hardware That Supports Learning

The Intel Core i7 HX-series processor easily handles coding, preparing data, and using multiple tools at the same time. For learning and project work, the CPU is more than enough.

The NVIDIA GeForce RTX 5050 comes with 8GB of VRAM and CUDA support. This matters because most GPU-accelerated training tools, including TensorFlow and PyTorch, are built around CUDA, which only runs on NVIDIA GPUs.
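Before installing frameworks, it is worth confirming which NVIDIA GPU and how much VRAM the system actually reports. The sketch below calls `nvidia-smi` (installed with the NVIDIA driver) using its `--query-gpu=name,memory.total` and `--format=csv,noheader,nounits` options and parses the output. The helper names are hypothetical, and the parser assumes GPU names contain no commas.

```python
from __future__ import annotations

import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"]


def parse_gpu_csv(text: str) -> list[tuple[str, int]]:
    """Parse lines of the form '<name>, <total memory in MiB>' into (name, MiB) pairs."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue  # skip blank lines
        name, mem = line.rsplit(",", 1)  # split on the last comma only
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def query_gpus() -> list[tuple[str, int]]:
    """Return [(name, total VRAM in MiB)], or [] if nvidia-smi is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_csv(result.stdout)


if __name__ == "__main__":
    for name, mib in query_gpus():
        print(f"{name}: {mib / 1024:.1f} GiB VRAM")
```

`memory.total` is reported in MiB, so an 8GB card such as the RTX 5050 shows a value close to 8,192. An empty result means `nvidia-smi` is not on the PATH, which usually points to a missing NVIDIA driver.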

This GPU works well for:

- Small AI models

- Testing results

- Fixing and improving models

It is not made for long or very large training jobs, but it works well for learning and practice.

Memory, Storage, and Upgrades

One strong point of the Legion 5i is that it can be upgraded later.

You can:

- Increase RAM up to 64GB

- Add or upgrade SSD storage as projects grow

This is useful because AI projects usually become bigger over time. There is no need to buy everything at the start.

Portability and Daily Use

Compared to large gaming or high-power laptops like the ASUS ROG Strix G16 and Lenovo Legion Pro 7i Gen 10, the Legion 5i is easier to carry and use every day. It works well for students going to class or people learning from different places.

It is a laptop that can be used daily, not only on a desk.

Cooling and Stability

Lenovo’s cooling system is built for long use, not short bursts. During long coding or training sessions, the laptop stays steady, heat is controlled, and performance does not drop suddenly.

For learning AI, steady performance is more important than speed test numbers.

Battery Expectations

Battery life is fine for light tasks like browsing, reading, or writing code. As expected, AI work uses more power and drains the battery faster.

For training or testing models, the laptop works best when plugged in.

Pros

✔ Good for learning AI on a personal laptop

✔ NVIDIA GPU with CUDA support

✔ RAM and storage can be upgraded

✔ Easy to carry for daily use

✔ Stable performance during long sessions

Cons

✘ Not suitable for large or long AI training

✘ 8GB VRAM limits advanced research work

✘ GPU is focused on learning, not maximum power

Best AMD-Powered Laptop for AI & ML

3. MSI Katana A17 AI

Key Specs

| Processor | AMD Ryzen 9 8945HS |

| Graphics | NVIDIA RTX 4070 |

| Memory | 16GB DDR5 |

| Storage | 2TB SSD |

| Display | 17.3-inch QHD, 240Hz |

| Weight | 5.95 lbs (2.70 kg) |

Check Current Pricing

The MSI Katana A17 AI is a powerful machine built for demanding AI, deep learning, and ML workloads. Its AMD Ryzen 9 8945HS processor handles data preparation, compiling, and multitasking comfortably, making this laptop a strong choice for programmers, researchers, and AI engineers.

The NVIDIA GeForce RTX 4070 GPU is what matters most here. Ray tracing and DLSS boost gaming performance, but for AI work the key features are CUDA support and Tensor cores, which accelerate training and inference in frameworks such as TensorFlow, PyTorch, and (with a CUDA-enabled build) OpenCV. Its 8GB of VRAM is enough for small and medium models but limits how large a model you can train locally.

The 17.3-inch QHD display with a 240Hz refresh rate delivers crisp, color-accurate visuals, perfect for data visualization, 3D rendering, and deep learning model monitoring. The RGB backlit keyboard adds a modern touch and allows users to work even in dim environments, while the AI-powered Cooler Boost 5 cooling system keeps the laptop cool even under heavy computing loads.

The Katana A17 AI also pairs fast DDR5 memory (16GB in the configuration listed above) with a 2TB PCIe NVMe SSD, which gives ample room for large AI datasets and multiple virtual environments. It handles parallel computing tasks, machine learning training, and simulations well, although 16GB of RAM can fill up quickly with larger datasets, so 32GB is worth considering for heavier work.

At 5.95 pounds (2.7 kg), this laptop is on the heavy side, but its sturdy construction and premium look make it a good fit for professionals who prioritize performance over portability. It lacks a Thunderbolt port, but it offers Wi-Fi 6 and Bluetooth 5.2 for reliable wireless connections, including access to cloud computing resources.

For anyone looking for a high-performance AMD-based AI laptop, the MSI Katana A17 AI is one of the strongest choices in 2026 for deep learning and machine learning work.

Pros

✔ AI-Powered Ryzen Processor

✔ RTX 4070 GPU with CUDA for AI & ML Processing

✔ Fast DDR5 RAM for Multitasking

✔ Fast NVMe SSD for Storage

✔ High-Resolution Display for Crisp Visuals

✔ Cooler Boost 5 for Efficient Thermal Management

Cons

✘ No Thunderbolt port

✘ Heavy at 5.95 lbs (2.70 kg)

Best Budget Laptop for Machine Learning

4. Acer Nitro V

Key Specs

| Processor | AMD Ryzen 7 8845HS |

| Graphics | NVIDIA RTX 4060 |

| Memory | 16GB DDR5 |

| Storage | 1TB SSD |

| Display | 16-inch WUXGA, 165Hz |

| Weight | 5.51 lbs (2.50 kg) |

Check Current Pricing

The Acer Nitro V offers exceptional performance, display quality, and AI-enhanced features in the mid-range gaming laptop space.

Performance and AI integration

The Nitro V 16 is powered by the AMD Ryzen 7 8845HS octa-core processor, which delivers fast performance for gaming, streaming, and productivity tasks. Built-in AI features also enhance functions such as noise reduction and image processing.

Graphics and Display

This laptop is equipped with the NVIDIA GeForce RTX 4060 GPU, which delivers smooth gaming with DLSS 3.5 support. For machine learning, its CUDA support and 8GB of VRAM handle small models and learning projects. The 16-inch WUXGA IPS display has a 165Hz refresh rate, 100% sRGB color gamut, and a 16:10 aspect ratio, delivering vivid, smooth visuals for gaming and content creation.

Memory and Storage

The Nitro V 16 comes with 16GB DDR5 RAM (expandable up to 32GB) and a 1TB PCIe Gen 4 SSD, ensuring fast load times and ample storage for games, apps, and datasets.

Cooling and Build

The dual-fan cooling system with a quad-intake, quad-exhaust design keeps temperatures in check during intense sessions and helps prevent thermal throttling. At around 2.5 kg, the laptop balances durability with reasonable portability.

Connectivity and Features

Connectivity options include Wi-Fi 6E, Gigabit Ethernet, USB 4, USB 3.2 Gen 2 ports, and HDMI 2.1, catering to various peripheral devices and high-speed network requirements. A dedicated Copilot key provides quick access to Microsoft's AI assistant.

Pros

✔ Affordable Price

✔ Robust gaming performance

✔ High-refresh-rate display with accurate color reproduction

✔ Comprehensive connectivity options

✔ AI-enhanced features for improved user experience

✔ Advanced cooling system ensuring sustained performance

Cons

✘ Battery life may require frequent charging during heavy use

✘ Webcam limited to 720p resolution

Read Acer Nitro V 16: Full Specifications and Benchmarks

Best Compact AI Gaming Laptop for Students

5. ASUS TUF Gaming A14

Key Specs

| Processor | AMD Ryzen 7 8845HS |

| Graphics | NVIDIA RTX 4050 |

| Memory | 16GB LPDDR5X |

| Storage | 512GB SSD |

| Display | 14-inch, 2.5K WQXGA, 165Hz |

| Weight | 3.22 lbs (1.46 kg) |

Check Current Pricing

This is one of the most portable AI gaming laptops of 2026. It is powered by the AMD Ryzen 7 8845HS CPU, which enables AI-native features such as Copilot+ PC calling, live subtitles, and AI artwork generation.

It has military-grade durability (MIL-STD-810H) and is equipped with dual 89-blade fans for excellent cooling performance. The RTX 4050 GPU and WQXGA 165Hz display make it suitable for training small models, light gaming, and high-frame-rate visual rendering, although the GPU's 6GB of VRAM limits larger projects.

This laptop is equipped with Wi-Fi 6E, HDMI 2.1, and a Copilot key, making it a well-balanced AI laptop for professionals and mobile creators.

Who Should Buy This & Why: Ideal for students, content creators, or professionals needing a reliable and portable system for AI, ML, and creative tasks. Its AI Copilot+ features and high-refresh-rate screen make it versatile enough for work and play.

Why You Might Skip It: If you handle large datasets or require extensive local storage, the limited SSD space and lower-tier GPU might be a constraint.

Pros

✔ Compact and rugged design

✔ G-SYNC enabled high-res display

✔ Copilot+ AI capabilities

✔ Efficient cooling

✔ Reasonably priced

Cons

✘ 512GB storage may be limited

✘ No dedicated Thunderbolt port

Learning & Development Pick (Not for Heavy Training)

Note: These laptops are for learning, coding, and AI tools, not for training machine learning or deep learning models locally.

| Laptop Model | Processor | GPU / AI Hardware | RAM | Storage | Display | Weight | Best For |
|---|---|---|---|---|---|---|---|
| Apple MacBook Pro 14 | Apple M5 | 10-core GPU + 16-core Neural Engine | 16GB Unified | 512GB SSD | 14.2″ Liquid Retina XDR | 3.42 lbs (1.55 kg) | AI development, macOS workflows |
| Microsoft Surface Pro 11 | Snapdragon X Elite | Adreno GPU + Hexagon NPU (45 TOPS) | 16GB LPDDR5X | 512GB Gen4 SSD | 13″ PixelSense Flow OLED | 1.97 lbs (0.89 kg)* | Students, ultra-portable development |
| ASUS Vivobook 14 | AMD Ryzen AI 7 350 | AMD Radeon with XDNA NPU | 16GB DDR5 | 512GB SSD | 14″ WUXGA, 60Hz | 3.22 lbs (1.46 kg) | Budget AI learning, entry-level ML |

Best macOS Laptop for AI Researchers

1. Apple MacBook Pro M5

Key Specs

| Processor | Apple M5 (10-Core) |

| Graphics | Apple M5 (10-Core) |

| Memory | 16GB LPDDR5X |

| Storage | 512GB SSD |

| Display | 14.2 inches, 3024 × 1964 pixels, 120Hz |

| Weight | 3.42 lbs (1.55 kg) |

Check Current Pricing

I used the MacBook Pro M5 as my main work laptop instead of treating it like a short test device. After long workdays, it became clear that this laptop is built for people who spend hours coding, testing ideas, and working inside macOS, not for heavy GPU-based model training.

For AI work, the MacBook Pro M5 fits best with tasks like testing models, preparing data, running AI tools, and using built-in AI features. Real-world use confirms that this is where it shines.

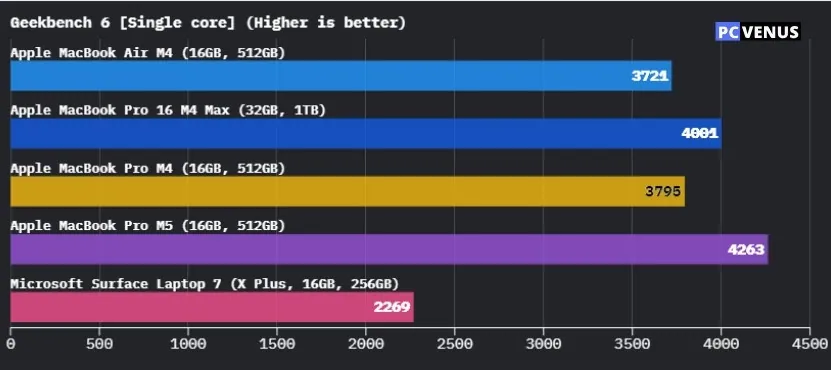

Real-World Performance for AI Work

In daily use, the Apple M5 chip felt steady rather than aggressively fast. Writing code, running notebooks, preparing datasets, and switching between many apps stayed smooth even after hours of continuous work.

The built-in Apple GPU and Neural Engine handled on-device AI features quietly in the background. Image analysis, background effects, and AI tools inside apps worked without sudden slowdowns or heat.

For TensorFlow and PyTorch work, I found the system suitable for testing ideas and running inference, but not for large training jobs that depend on NVIDIA GPUs and CUDA. Those workloads still run better on Windows laptops or cloud GPUs.

What stood out most was stability. Performance did not drop over time, and the system stayed responsive during long sessions, which matters more than short speed tests in daily work.
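Because CUDA is not available on macOS, PyTorch code meant to run on both a MacBook and an NVIDIA laptop usually picks its device at startup. The snippet below is a minimal sketch of that pattern, assuming PyTorch is the framework in use; `pick_device` is a hypothetical helper, not part of PyTorch. It prefers CUDA, then Apple's Metal Performance Shaders (MPS) backend, then the CPU, and it falls back to the CPU if PyTorch is not installed at all.

```python
import importlib.util


def pick_device() -> str:
    """Return "cuda", "mps", or "cpu", whichever PyTorch can use on this machine."""
    # Without PyTorch installed there is no GPU backend to use.
    if importlib.util.find_spec("torch") is None:
        return "cpu"

    import torch

    # NVIDIA GPUs (Windows/Linux) are exposed through CUDA.
    if torch.cuda.is_available():
        return "cuda"
    # Apple Silicon GPUs are exposed through the MPS backend (PyTorch 1.12+).
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return "mps"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print(f"PyTorch device: {device}")
    if device == "cuda":
        import torch

        props = torch.cuda.get_device_properties(0)
        # total_memory is reported in bytes.
        print(f"{props.name}: {props.total_memory / 1024**3:.1f} GiB of VRAM")
```

On an Apple Silicon Mac with a recent PyTorch build, this would typically select `mps`; models and tensors then need `.to(device)` to run there. Some operations are not yet implemented for MPS, which is one reason heavy training still tends to happen on CUDA machines.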

Display, Comfort, and Daily Use

The 14.2-inch Liquid Retina XDR display is one of the strongest parts of the MacBook Pro M5. During long coding and writing sessions, text stayed clear and easy to read, and the 120Hz refresh rate made scrolling and window movement feel smoother than standard displays.

Brightness and color quality were excellent in real use, even in bright rooms. For people who spend many hours looking at the screen, this improves comfort and reduces eye strain.

The keyboard and trackpad remain among the best I’ve used on any laptop. Typing for long periods felt easy, and the trackpad stayed accurate for gesture-heavy work.

Battery Life and Heat (Real Use)

Battery life is one of the MacBook Pro M5’s biggest strengths. In everyday use, I consistently saw:

- About 14–17 hours for light work and development

- Up to 22 hours for video playback

- Around 6–9 hours during heavier tasks like editing and compiling

Even on battery power, performance stayed steady without sharp slowdowns.

Heat control was also good. During light work, the laptop stayed cool and silent. Under heavier tasks, fans became noticeable but never distracting, and temperatures stayed under control without sudden drops in performance.

Where the MacBook Pro M5 Makes Sense

For people working mainly on macOS, the MacBook Pro M5 is a strong choice for:

- Testing AI ideas

- Preparing data and running tools

- Using AI features inside creative and development apps

- Long workdays where battery life and quiet operation matter

It is not made for heavy deep learning training that needs CUDA or large amounts of VRAM. In those cases, cloud GPUs or high-end Windows laptops are still the better option.

Pros

✔ Excellent battery life for long workdays

✔ Quiet and steady performance over time

✔ Outstanding display quality

✔ Strong built-in AI features in macOS

✔ High-quality keyboard and trackpad

Cons

✘ Not suitable for heavy local model training

✘ No CUDA support

✘ Expensive compared to Windows learning laptops

Read our Apple MacBook Pro M5 Review

Best For AI Tools, Learning, and Everyday Work

2. Microsoft Surface Pro 11

Key Specs

| Processor | Snapdragon X Elite |

| Graphics | Adreno GPU + Hexagon NPU (45 TOPS) |

| Memory | 16GB LPDDR5X |

| Storage | 512GB SSD |

| Display | 13-inch, 120Hz |

| Weight | 1.97 lbs (0.89 kg) |

Check Current Pricing

I reviewed the Microsoft Surface Pro 11 as a daily-use device. My unit had a slightly different configuration, but the overall experience made it very clear what this device is built for.

Before going further, one thing needs to be clear: this is not a laptop for machine learning or deep learning training. It does not support NVIDIA CUDA and is not designed to train models on the device. Instead, it is made for learning, coding practice, and using AI-powered tools.

What the Surface Pro 11 Is Good At

The Surface Pro 11 follows Microsoft’s familiar two-in-one design. It works as both a tablet and a laptop, and it is very light, easy to carry, and comfortable to use for long periods.

This design works especially well for:

- Students

- Online classes

- Note-taking and reading

- Office and everyday work

It is a device you can carry all day without feeling weighed down.

Performance in Daily Use

The laptop runs on the Snapdragon X Elite (12-core) processor and Windows 11 with Copilot+ features. In daily use, the system feels fast and smooth for:

- Browsing

- Document work

- Coding practice

- Media use

- Light creative tasks

Apps open quickly, and switching between tasks feels smooth.

The version listed here comes with 16GB RAM and 512GB storage, which is enough for learning, study, and general work.

However, it uses integrated Qualcomm Adreno graphics, which are not meant for gaming, heavy video editing, or GPU-based machine learning work.

Display and Comfort

The 13-inch OLED touchscreen is one of the strongest parts of the Surface Pro 11. The screen is sharp, bright, and easy on the eyes, which makes it great for reading, writing, and watching content.

Touch and pen support work very well. For users who like handwritten notes, drawing, or marking documents, this is a big advantage over traditional laptops.

Battery Life

Battery life is another strong point of this Surface 2-in-1 laptop. In my use, the Surface Pro 11 easily lasted a full workday (around 12 hours) with normal tasks like browsing, writing, coding practice, and video calls.

This makes it a good choice for people who work away from a desk or move around a lot during the day.

Who Should Choose This Laptop

The Microsoft Surface Pro 11 makes the most sense for users who want:

- A very portable Windows device

- A quiet and cool system

- A good screen for reading and notes

- AI tools, coding practice, and study work

It fits best into learning and everyday development workflows rather than performance-heavy workloads.

Pros

✔ Very light and easy to carry

✔ Excellent OLED touchscreen

✔ Smooth performance for daily tasks

✔ Long battery life

✔ Flexible tablet and laptop design

Cons

✘ Not built for gaming or heavy GPU work

✘ Limited for demanding video editing

Read our Microsoft Surface Pro 11 Review

Most Affordable AI Laptop

3. ASUS Vivobook 14

Key Specs

| Processor | AMD Ryzen AI 7 350 |

| Graphics | AMD Radeon |

| Memory | 16GB DDR5 |

| Storage | 512GB SSD |

| Display | 14-inch WUXGA, 60Hz |

| Weight | 3.22 lbs (1.46 kg) |

Check Current Pricing

For users looking for a budget AI laptop, the ASUS Vivobook 14 Copilot+ PC delivers excellent value. Powered by AMD’s AI-focused Ryzen AI 7 350 processor with an XDNA NPU (up to 50 TOPS), it enables Copilot+ AI features out of the box.

Its compact 14-inch chassis is paired with a 60Hz WUXGA display and backlit chiclet keyboard. The Radeon integrated graphics are not ideal for GPU-heavy training, but they’re sufficient for lightweight ML tasks, data analysis, and development.

Perfect for students and developers getting started with AI tools, it’s a low-cost entry into the next generation of computing.

Who Should Buy This & Why: This is perfect for students, beginner developers, or anyone exploring AI tools and basic ML workflows. It offers great battery life, portability, and Copilot+ features without breaking the bank.

Why You Might Skip It: If you need strong GPU acceleration or plan to train models with large datasets, this laptop’s integrated graphics will be a limiting factor.

Pros

✔ Affordable AI-ready laptop

✔ Copilot+ PC with Recall, Live Captions

✔ Fast Ryzen AI processor

✔ Great battery life

Cons

✘ Integrated GPU limits deep learning capabilities

✘ 60Hz refresh rate

Three Common AI Work Styles

Learning and Coursework

This category includes students and beginners who primarily work with notebooks, tutorials, and small datasets. Typical tasks involve Jupyter notebooks, introductory PyTorch or TensorFlow projects, and frequent use of cloud platforms such as Colab or Kaggle.

At this stage, system stability and reliability matter more than raw performance. A laptop that runs consistently without crashes or thermal issues is more useful than a powerful system that cannot sustain load.

Regular Local Training

This level applies to users who train models directly on their laptops using medium-sized datasets and longer experiments. Training runs often last hours rather than minutes, which quickly exposes hardware limits.

Memory constraints and thermal throttling become common issues here. Laptops that look capable on paper may slow down significantly under sustained workloads, making cooling and memory capacity critical factors.
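On an NVIDIA laptop, one way to spot throttling during a long run is to log GPU temperature, SM clock, and power draw with `nvidia-smi` and watch for the clock sagging as the temperature climbs. The sketch below assumes the NVIDIA driver's `nvidia-smi` tool is on the PATH; the helper names and the 85% clock-drop threshold are illustrative assumptions, not NVIDIA guidance.

```python
import subprocess
from dataclasses import dataclass

# Real nvidia-smi query fields; run `nvidia-smi --help-query-gpu` to list them.
QUERY_FIELDS = "temperature.gpu,clocks.sm,power.draw"


@dataclass
class GpuSample:
    temp_c: float
    sm_clock_mhz: float
    power_w: float


def _to_float(value: str) -> float:
    """Convert one CSV field, mapping values such as "[N/A]" to NaN."""
    try:
        return float(value.strip())
    except ValueError:
        return float("nan")


def parse_line(line: str) -> GpuSample:
    """Parse one line of `--format=csv,noheader,nounits` output."""
    temp, clock, power = (_to_float(v) for v in line.split(","))
    return GpuSample(temp, clock, power)


def read_sample(gpu_index: int = 0) -> GpuSample:
    """Take a single reading from one GPU by calling nvidia-smi."""
    result = subprocess.run(
        [
            "nvidia-smi",
            f"--id={gpu_index}",
            f"--query-gpu={QUERY_FIELDS}",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_line(result.stdout.strip().splitlines()[0])


def looks_throttled(samples: list[GpuSample], drop_ratio: float = 0.85) -> bool:
    """Heuristic: True if the latest SM clock fell below drop_ratio * peak clock."""
    if len(samples) < 2:
        return False
    peak = max(s.sm_clock_mhz for s in samples)
    return samples[-1].sm_clock_mhz < drop_ratio * peak
```

In practice you might call `read_sample()` every few seconds while training runs (or use `nvidia-smi` with `-l 5`, which repeats the query every five seconds) and pass the collected samples to `looks_throttled`. A clock that keeps falling while the temperature sits near its peak suggests the cooling system, rather than the model, is the bottleneck.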

Advanced or Research Work

Advanced users work with larger models, extended training sessions, and repeated tuning cycles. At this level, laptops are pushed close to their practical limits.

Sustained power delivery, strong cooling systems, and higher memory capacity matter far more than thin design or battery life. Many users at this level combine local work with cloud GPUs to handle heavier workloads efficiently.

Practical Hardware Levels (Based on Real Usage)

Rather than focusing on brand names or marketing labels, it helps to think in functional tiers based on how demanding your workloads are. Each level places different pressure on the CPU, GPU, memory, and cooling system.

1. Good: Learning, Coursework, and Small Projects

This level is suitable for students and beginners who are learning machine learning concepts and working on small projects.

At this stage:

- CPU: Any modern high-performance CPU is sufficient for notebooks, data preprocessing, and basic experimentation.

- GPU: A dedicated NVIDIA GPU is helpful but not strictly required if most training is done in the cloud.

- VRAM: 6–8GB is enough for small models and basic local experiments.

- RAM: 16GB is the practical minimum to avoid slowdowns when working with notebooks and datasets.

- Storage: A fast SSD with at least 512GB helps keep workflows smooth.

The focus here is stability and smooth learning rather than maximum performance.

2. Better: Regular Local Training

This level fits users who train models locally on a regular basis and work with medium-sized datasets.

At this level:

- CPU: Still important for data preparation and multitasking, but no longer the main performance limiter.

- GPU: A dedicated NVIDIA GPU with strong CUDA support becomes essential.

- VRAM: Around 12GB is far more comfortable and reduces frequent out-of-memory errors.

- RAM: 32GB significantly improves workflow stability when running multiple notebooks or experiments.

- Cooling: Sustained cooling performance starts to matter, as workloads often run for hours.

This tier balances performance and cost while avoiding most common bottlenecks.
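When a model does not fit in VRAM at the batch size you want, a widely used workaround is gradient accumulation: process several smaller micro-batches, add up their gradients, and take one optimizer step at the end. The plain-Python sketch below uses a hypothetical one-parameter model (`y = w * x` with mean squared error) to show why this works: scaling each micro-batch gradient by 1/steps makes the sum equal to the full-batch gradient, while only one micro-batch needs to be in memory at a time.

```python
def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Gradient of the mean squared error of y_hat = w * x with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def accumulated_grad(w: float, xs: list[float], ys: list[float], micro_batch: int) -> float:
    """Accumulate per-micro-batch gradients, each scaled by 1/steps.

    Assumes equal-sized micro-batches; uneven splits would need weighting.
    """
    if len(xs) % micro_batch != 0:
        raise ValueError("batch size must be a multiple of micro_batch")
    steps = len(xs) // micro_batch
    total = 0.0
    for start in range(0, len(xs), micro_batch):
        # Only this slice would need to sit in GPU memory at once.
        chunk_x = xs[start:start + micro_batch]
        chunk_y = ys[start:start + micro_batch]
        total += mse_grad(w, chunk_x, chunk_y) / steps
    return total


if __name__ == "__main__":
    xs = [float(i) for i in range(1, 9)]
    ys = [2 * x + 1 for x in xs]
    print(mse_grad(0.5, xs, ys), accumulated_grad(0.5, xs, ys, micro_batch=2))
```

In a PyTorch training loop, the same idea is usually written by dividing each micro-batch loss by the number of steps before calling `loss.backward()`, which adds into the existing `.grad` values, and calling `optimizer.step()` and `optimizer.zero_grad()` once per group. The trade-off is time: the GPU does the same work in more, smaller passes.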

3. Best: Heavy Training and Research-Level Work

This level is designed for advanced users working with large models, long training sessions, and repeated tuning cycles.

At this level:

- CPU: Supports heavy preprocessing and parallel workloads, but GPU performance still dominates training speed.

- GPU: High-end NVIDIA GPUs with large VRAM capacity are critical.

- VRAM: 16GB or more provides the headroom needed for larger models and batch sizes.

- RAM: 32GB or more helps prevent memory pressure during complex workflows.

- Cooling and Power: Robust thermal design and sustained power delivery are essential to maintain performance over long runs.

Even at this level, many users combine local work with cloud GPUs to extend capability beyond laptop limits.
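A rough way to see why the VRAM tiers above differ is to estimate how much memory a model's parameters alone occupy. The sketch below uses a common rule of thumb, treated here as an assumption: about 2 bytes per parameter for FP16 inference, 4 for FP32 inference, and about 16 for FP32 training with the Adam optimizer (4 for weights, 4 for gradients, 8 for Adam's two moment estimates). Activations, framework overhead, and the CUDA context come on top, so real usage is higher.

```python
GIB = 1024 ** 3

# Approximate bytes per parameter (rule-of-thumb assumptions, not measurements).
BYTES_PER_PARAM = {
    "fp16_inference": 2,       # half-precision weights only
    "fp32_inference": 4,       # single-precision weights only
    "fp32_adam_training": 16,  # weights 4 + gradients 4 + Adam moments 8
}


def estimate_vram_gib(num_params: float, mode: str) -> float:
    """Lower-bound VRAM estimate in GiB; excludes activations and overhead."""
    if mode not in BYTES_PER_PARAM:
        raise ValueError(f"unknown mode: {mode!r}")
    return num_params * BYTES_PER_PARAM[mode] / GIB


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params in (125e6, 350e6, 1e9):
        train = estimate_vram_gib(params, "fp32_adam_training")
        infer = estimate_vram_gib(params, "fp16_inference")
        print(f"{params / 1e6:>6.0f}M params: ~{train:.1f} GiB to train, ~{infer:.1f} GiB to run")
```

By this estimate, a hypothetical 1-billion-parameter model needs roughly 14.9 GiB just for its FP32 Adam training state, more than an 8GB or 12GB laptop GPU offers before activations are even counted, while running the same model in FP16 needs about 1.9 GiB for its weights.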

Do You Really Need to Train Models Locally?

In real-world use, many learners do not need to train models locally all the time. Cloud platforms offer access to powerful GPUs without the heat, noise, or hardware limits of a laptop, making them ideal for learning and experimentation.

Local training becomes important when working offline, running long experiments without session limits, or building custom workflows that cloud environments cannot handle efficiently. Understanding this distinction early helps set realistic expectations.

Common Buying Mistakes to Avoid

Certain mistakes consistently lead to poor experiences:

- Choosing laptops labeled “AI” without verifying GPU and CUDA support

- Prioritizing thin designs that cannot sustain heavy workloads

- Focusing on CPU performance while ignoring memory and VRAM limits

- Underestimating how quickly GPU memory fills during training

- Assuming all operating systems offer equal support for ML tools

Avoiding these mistakes often has a greater impact than selecting the newest hardware.

Frequently Asked Questions

Do I need a high-end GPU like RTX 5090 for deep learning?

No. Most students and beginners do not need top-end GPUs. An RTX 4070 or RTX 4080 with enough VRAM is sufficient for learning and most local deep learning projects. Very high-end GPUs only make sense for large-scale research workloads.

Is 16GB of RAM enough for machine learning, or should I get 32GB?

16GB of RAM works for learning and small projects, but it fills up quickly with multiple datasets or notebooks. For smoother workflows and fewer slowdowns, 32GB is a better long-term choice if your budget allows.
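A quick calculation shows how fast 16GB disappears. The sketch below estimates the raw in-memory size of a dense numeric table; the row and column counts are hypothetical, and it ignores indexes, string columns, and per-object overhead. It assumes 8 bytes per value for float64 (the default float type in NumPy and pandas) and 4 bytes for float32.

```python
GIB = 1024 ** 3


def table_size_gib(rows: int, cols: int, bytes_per_value: int = 8) -> float:
    """Raw size in GiB of a dense numeric table (no index or object overhead)."""
    return rows * cols * bytes_per_value / GIB


if __name__ == "__main__":
    # A hypothetical dataset: 10 million rows, 50 numeric columns.
    print(f"float64: {table_size_gib(10_000_000, 50):.2f} GiB")
    print(f"float32: {table_size_gib(10_000_000, 50, bytes_per_value=4):.2f} GiB")
```

That single table takes about 3.73 GiB as float64, and common operations such as joins, filters, and feature engineering often create temporary copies, so peak usage can be a multiple of the raw size. With the operating system, an IDE, a browser, and a couple of notebooks also running, 16GB fills up quickly. For an existing NumPy array, the `nbytes` attribute reports the same raw figure.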

Mac vs Windows: Which is better for machine learning and AI?

Macs are good for coding, experimentation, and lightweight AI tasks. However, most deep learning frameworks are optimized for NVIDIA CUDA, which is not available on Macs, so Windows or Linux laptops with RTX GPUs remain the better choice for local model training.

Are gaming laptops good for machine learning and AI?

Yes. Gaming laptops often come with powerful GPUs and strong cooling systems, both of which are essential for machine learning.

The drawbacks? They tend to be heavy and noisy, and battery life is usually short. But if you want great performance without the need for a full-fledged workstation, a gaming laptop is a good option.

Can students use budget laptops for machine learning?

Yes, but with some limitations. A budget laptop can run small projects, do a little coding, and maybe even handle lightweight models. But once you start working with large datasets, you’ll quickly run into problems.

For students, a budget laptop is fine to learn the basics, but for serious projects, you’ll eventually need a laptop with a stronger GPU and more memory.

Is an NPU mandatory for machine learning or AI laptops?

No. NPUs are not mandatory for machine learning or deep learning. They are designed for lightweight AI tasks like background effects and on-device assistants. For local model training, an NVIDIA GPU with CUDA support is far more important.

Can AI or Copilot+ laptops replace GPU-based ML laptops?

No. AI or Copilot+ laptops are useful for productivity and AI-assisted features, but they cannot replace NVIDIA GPU-based laptops for local machine learning training. They are best used for learning, coding, and cloud-based workflows.

Final Words

While testing and reviewing these laptops, one thing stayed consistent: machine learning workloads quickly expose weak hardware choices. Performance drops, memory limits, and heat issues appear much faster than most people expect.

The laptops selected here are based on how they handled those pressures in real use. Each option suits a different level of work, making it easier to choose a system that matches your projects without paying for power you don’t actually need.