Speed is vital in today’s world of computers and the internet, and cache memory plays a crucial role in delivering it. When you browse a website, stream a video, or run programs on your computer, you want them to respond quickly, without delays.

One of the unsung heroes behind this lightning-fast performance is the cache.

In this article, we’ll explore cache memory, explain its purpose in simple terms, and look at how it can significantly boost your computer’s speed.

What is Cache Memory?

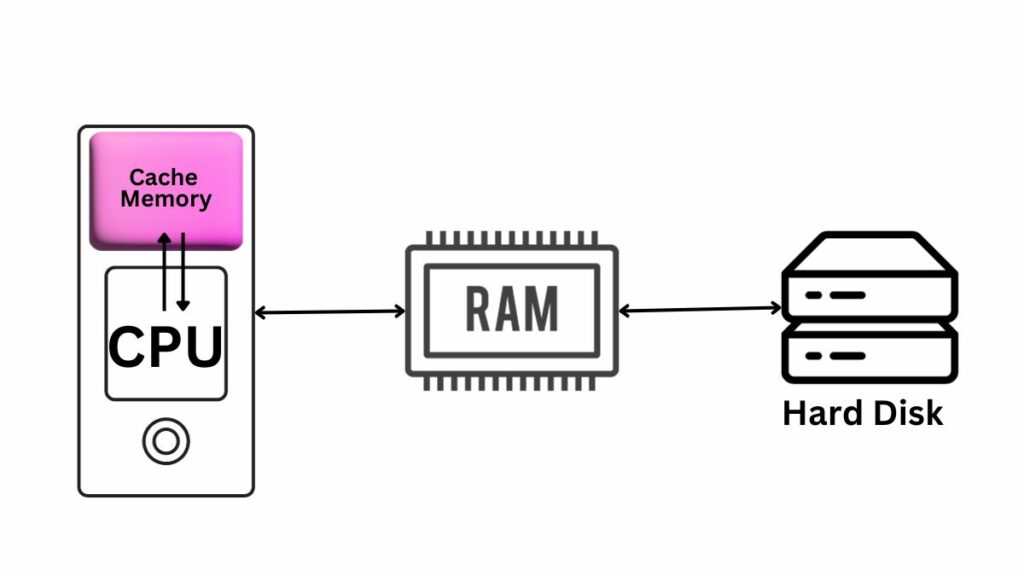

Cache memory, often just called “cache,” is a super-speedy helper for your computer’s CPU. It’s a tiny but lightning-fast memory that keeps the important data and instructions the CPU needs close by. Think of cache as an intermediary between the CPU and the regular main memory (RAM).

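Cache is usually built from SRAM (static RAM), which is faster than the DRAM (dynamic RAM) used for main memory. SRAM stores each bit in a small transistor circuit that holds its value without constant refreshing, while DRAM stores bits as electrical charge in capacitors that must be refreshed regularly.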

Why is cache so important?

Well, it makes your computer much faster. Instead of the CPU waiting a long time to get data from regular memory, it can grab it quickly from the cache.

Cache memory is smart about what it keeps, too. Programs tend to reuse the same data and instructions, so the cache holds on to recently used items. It’s like keeping your favorite book on your desk because you read it often.

Types of Cache Memory (L1, L2, L3)

Cache memory is organized into multiple levels, typically called L1, L2, and L3. Each level trades off speed against size, and all of them give the CPU faster access to data than main memory.

1. L1 Cache (Level 1 Cache)

L1 cache is the closest and fastest cache memory to the CPU. It is typically divided into separate instruction cache (L1i) and data cache (L1d).

The instruction cache holds frequently accessed program instructions, while the data cache stores frequently used data.

L1 cache has the lowest latency and fastest access speeds but a smaller capacity than higher-level caches.

2. L2 Cache (Level 2 Cache)

The L2 cache is the second level of cache memory in the memory hierarchy. It is larger than the L1 cache and sits further away from the CPU core.

L2 cache is a middle ground between the faster L1 cache and the slower main memory (RAM). It helps bridge the speed gap between the CPU and main memory, providing faster access times than the main memory but slower than the L1 cache.

3. L3 Cache (Level 3 Cache)

L3 cache is the third level of cache memory and is not present in every computer architecture. It is larger than both the L1 and L2 caches and is typically shared among all the cores of a processor.

Because it is shared, the L3 cache lets cores exchange data without going all the way to main memory, and in many designs it also helps keep the cores’ private caches consistent with each other (cache coherency) in multi-core systems. It offers faster access than main memory but is slower than the L1 and L2 caches.

How Cache Memory Works

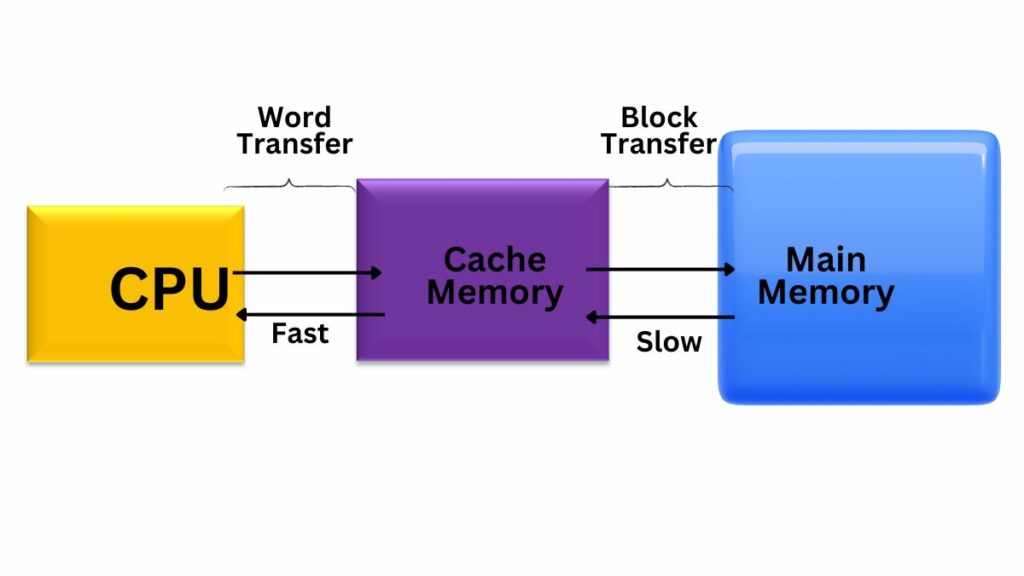

Cache memory works by storing copies of frequently accessed data and instructions in a faster and closer memory location than the main memory.

When the CPU needs to access data, it first checks the cache. If the data is found in the cache (known as a cache hit), it can be retrieved quickly, avoiding slower access to the main memory.

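Cache memory relies on the principle of locality, which comes in two forms. Temporal locality means that recently accessed data is likely to be accessed again in the near future. Spatial locality means that data stored near recently accessed data is likely to be accessed soon, which is why caches load whole blocks of neighboring bytes at once.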
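To put spatial locality into numbers, here is a toy direct-mapped cache simulator in Python. Everything in it is an assumption chosen for illustration: 64-byte cache lines, a tiny 4 KiB cache with 64 lines, and a 64 x 64 matrix of 4-byte integers stored row by row. It only counts hits and misses; it does not model real timing.

```python
LINE_SIZE = 64   # bytes per cache line (assumed; a common size)
NUM_LINES = 64   # lines in this toy cache, i.e. 4 KiB in total (assumed)
ELEM_SIZE = 4    # bytes per matrix element, e.g. a 32-bit int (assumed)
N = 64           # the matrix is N x N, stored row by row


def split_address(addr: int) -> tuple[int, int, int]:
    """Split a byte address into (tag, index, offset) for a direct-mapped cache."""
    offset = addr % LINE_SIZE          # position of the byte inside its line
    line_number = addr // LINE_SIZE    # which line-sized block of memory
    index = line_number % NUM_LINES    # which cache slot that block maps to
    tag = line_number // NUM_LINES     # identifies the block within that slot
    return tag, index, offset


def simulate(addresses) -> tuple[int, int]:
    """Return (hits, misses) for a sequence of byte addresses."""
    tags = [None] * NUM_LINES  # tag currently held by each cache slot
    hits = misses = 0
    for addr in addresses:
        tag, index, _ = split_address(addr)
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag  # load the line, evicting whatever was there
    return hits, misses


def row_major():
    """Visit A[r][c] row by row: consecutive accesses are 4 bytes apart."""
    for r in range(N):
        for c in range(N):
            yield (r * N + c) * ELEM_SIZE


def column_major():
    """Visit A[r][c] column by column: consecutive accesses are 256 bytes apart."""
    for c in range(N):
        for r in range(N):
            yield (r * N + c) * ELEM_SIZE


print("row-major:   ", simulate(row_major()))
print("column-major:", simulate(column_major()))
```

Walking the matrix row by row, each 64-byte line serves 16 consecutive elements, so only 1 access in 16 misses (3840 hits, 256 misses). Walking it column by column jumps 256 bytes per step, so every access lands in a different line, and in this power-of-two layout those lines evict each other before they can be reused: all 4096 accesses miss. Real CPUs have set-associative caches and hardware prefetchers that soften this, but the same effect is why loop order can matter for performance.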

If the requested data is not found in the L1 cache (a cache miss), the CPU checks the next level, the L2 cache, which is larger but slower. If the data is still not found, it checks L3 (when present), and finally main memory. The data is then typically copied into the faster cache levels so that the next access to it is a hit.
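The level-by-level search can be sketched as a toy model. The latencies below are hypothetical round numbers, not measurements of any real CPU. The sketch also assumes that each level is checked one after another with latencies simply adding up, that every level has unlimited capacity, and that fetched data is copied into every level.

```python
# Hypothetical lookup latencies in CPU cycles, for illustration only.
LEVELS = [("L1", 4), ("L2", 12), ("L3", 40)]
MEMORY_LATENCY = 200


class CacheHierarchy:
    """Toy model: each level is a set of addresses with unlimited capacity."""

    def __init__(self):
        self.levels = {name: set() for name, _ in LEVELS}

    def load(self, addr: int) -> tuple[str, int]:
        """Return (where the data was found, total cycles spent)."""
        cycles = 0
        for name, latency in LEVELS:
            cycles += latency          # pay for checking this level
            if addr in self.levels[name]:
                self._fill(addr)       # make sure the faster levels get a copy
                return name, cycles
        cycles += MEMORY_LATENCY       # missed everywhere: go to main memory
        self._fill(addr)
        return "RAM", cycles

    def _fill(self, addr: int) -> None:
        # Keep a copy at every level (a simplification of real fill policies).
        for contents in self.levels.values():
            contents.add(addr)


cpu = CacheHierarchy()
print(cpu.load(0x40))            # first touch: ('RAM', 256)
print(cpu.load(0x40))            # now cached: ('L1', 4)
cpu.levels["L1"].discard(0x40)   # pretend L1 evicted the line
print(cpu.load(0x40))            # found one level down: ('L2', 16)
```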

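Several mechanisms decide what the cache holds. Data moves between memory and cache in fixed-size blocks called cache lines (commonly 64 bytes on modern CPUs). Each line carries a cache tag that records which part of memory it came from. When the cache is full, a replacement policy such as least recently used (LRU) or random replacement chooses which line to evict.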
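Here is a minimal Python model of the LRU replacement policy, treating each key as a hypothetical cache-line address. It relies on `collections.OrderedDict`, which keeps entries in order and can move an entry to the end when it is used. Real CPUs implement replacement in hardware within each cache set and often use cheaper approximations of LRU; this sketch only shows the policy’s logic.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used entry."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        # key -> value, ordered from least to most recently used
        self.lines = OrderedDict()

    def get(self, key):
        if key not in self.lines:
            return None                 # cache miss
        self.lines.move_to_end(key)     # mark as most recently used
        return self.lines[key]

    def put(self, key, value):
        if key in self.lines:
            self.lines.move_to_end(key)
        self.lines[key] = value
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("A", 1)
cache.put("B", 2)
cache.get("A")            # touching A makes B the least recently used
cache.put("C", 3)         # cache is full, so B is evicted
print(cache.get("B"))     # None
print(list(cache.lines))  # ['A', 'C']
```

Reading "A" marks it as recently used, so when "C" arrives and the cache is full, "B" is the entry that gets evicted.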

The Importance of Cache Memory in Computing

Cache memory is an integral component in computing systems due to its significant impact on overall performance and efficiency.

Here are the key reasons cache memory is crucial:

1. Improved Data Access Speed

Cache memory stores frequently accessed data and instructions, allowing quicker access than fetching from the main memory. This reduces the time it takes for the CPU to retrieve information, resulting in faster processing speeds.

2. Reduced Memory Latency

Cache memory helps mitigate the latency of accessing data from the main memory. By having frequently used data readily available in the cache, the CPU can access it more quickly, minimizing the time spent waiting for data retrieval and reducing overall latency.

3. Enhanced CPU Utilization

With cache memory, the CPU can keep processing instructions instead of sitting idle while it waits for data, because frequently accessed data is readily available. This increases CPU utilization and keeps the processor consistently executing tasks, improving overall system performance.

4. Lower Memory Traffic

Because cache memory holds often-used data, the CPU needs to access main memory less often. This reduces traffic on the memory bus, leaving more memory bandwidth for other tasks and making data transfers more efficient.

5. Effective Multitasking

Cache memory can hold data from several apps or tasks at the same time, so when the CPU switches between them, some of what it needs may still be cached. This helps the CPU switch between tasks quickly, keeping your computer responsive when you run many things at once.

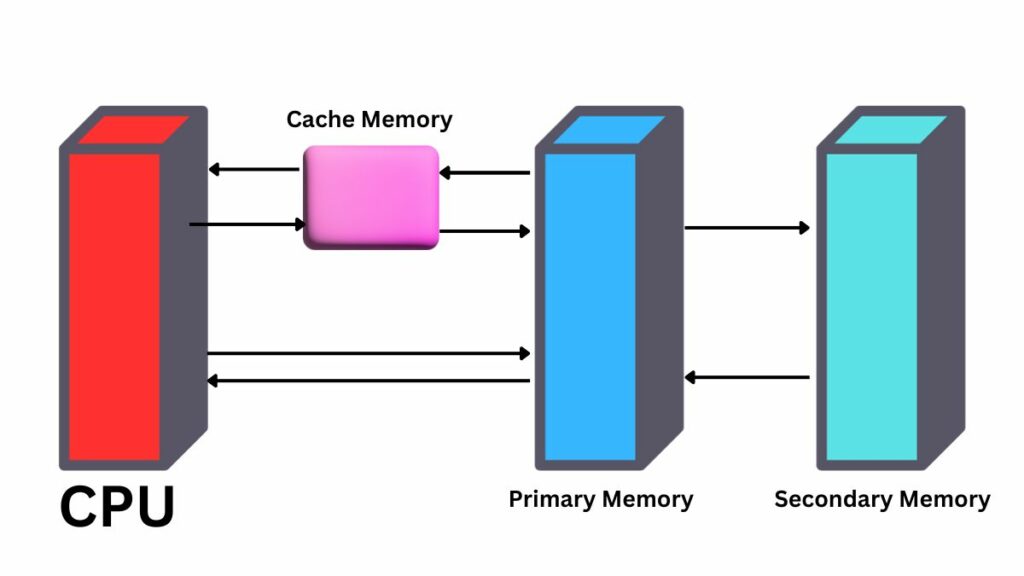

Relationship between Cache, RAM, and Storage Devices

| Characteristic | Cache Memory | RAM (Main Memory) | Storage Devices |
|---|---|---|---|
| Role | Small, fast memory located close to the CPU; stores frequently accessed data and instructions from RAM and operates on the principle of locality to exploit data access patterns | Stores actively used data and instructions for running applications; provides temporary storage that the CPU can access quickly | Provides long-term storage for data, programs, and files |
| Capacity | Smallest; different levels (e.g., L1, L2, L3) have varying capacities | Larger than cache memory | Much larger than cache and RAM |
| Speed | Fastest; faster access times than RAM, aiding quick data retrieval for the CPU | Slower than cache memory but faster than storage devices | Slower than cache and RAM; hard drives have mechanical components and are relatively slow, while SSDs use flash memory and are faster |
| Volatility | Volatile (traditional cache) | Volatile; data is lost when the computer is powered off or restarted | Non-volatile; data is retained even when the computer is powered off |

Cache Size and its Impact on System Performance

The size of cache memory has a significant impact on system performance. A larger cache can hold more data and instructions, which generally raises the hit rate (the fraction of accesses served from the cache) and reduces the frequency of cache misses.
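One common way to quantify the benefit of a higher hit rate is the average memory access time (AMAT) for a single cache level, where t_hit is the time to read from the cache, m is the miss rate, and t_penalty is the extra time needed to fetch from the next level on a miss. The numbers below are hypothetical (a 4-cycle hit time and a 100-cycle miss penalty), chosen only to show the arithmetic.

```latex
\begin{aligned}
\text{AMAT} &= t_{\text{hit}} + m \times t_{\text{penalty}} \\
m = 0.10:\quad \text{AMAT} &= 4 + 0.10 \times 100 = 14 \text{ cycles} \\
m = 0.05:\quad \text{AMAT} &= 4 + 0.05 \times 100 = 9 \text{ cycles}
\end{aligned}
```

Halving the miss rate cuts the average access time from 14 to 9 cycles in this example. If a bigger cache also raised the hit time, part of that gain would be lost, which is one reason choosing a cache size is a balancing act.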

However, a larger cache costs more to manufacture, takes up more chip area, consumes more power, adds to the complexity of the memory hierarchy, and generally takes longer to access. Cache size is therefore a trade-off between cost, power consumption, and performance, and CPU designers aim to strike a balance by choosing cache sizes suited to different applications and target markets.

Cache memory is essential in modern CPUs, including those from Intel and AMD and processors based on ARM designs. Cache configurations vary, but they typically include multiple levels (L1, L2, and often L3).

Real-World Applications of Cache Memory

1. Web Browsing and Page Caching

Web browsers apply the same caching idea: they store copies of often-used web pages and resources, such as images and scripts, locally. This means faster page loading and a smoother browsing experience, because the browser doesn’t need to fetch everything from the web server every time you visit a page.
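The core of page caching can be sketched in a few lines: keep a copy of each page and reuse it until it is too old. This minimal Python sketch assumes a single fixed freshness lifetime (`max_age`, loosely modeled on the HTTP `Cache-Control: max-age` directive), and the `fetch` function is a hypothetical stand-in for a real network request. Real browsers follow the full HTTP caching rules, including revalidation with the server, which this does not model.

```python
import time
from typing import Callable


class PageCache:
    """Reuse fetched pages for max_age seconds before fetching them again."""

    def __init__(self, fetch: Callable[[str], str], max_age: float,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch      # downloads a page (hypothetical stand-in)
        self.max_age = max_age  # freshness lifetime in seconds (assumed fixed)
        self.clock = clock      # injectable clock, handy for testing
        self.entries: dict[str, tuple[float, str]] = {}  # url -> (time stored, body)

    def get(self, url: str) -> str:
        now = self.clock()
        entry = self.entries.get(url)
        if entry is not None and now - entry[0] < self.max_age:
            return entry[1]     # fresh copy: no request to the server
        body = self.fetch(url)  # missing or stale: ask the server
        self.entries[url] = (now, body)
        return body


# Usage with a fake server and a fake clock, so no network is needed.
requests = []

def fake_fetch(url: str) -> str:
    requests.append(url)
    return f"<html>{url}</html>"

fake_now = [0.0]
cache = PageCache(fake_fetch, max_age=60, clock=lambda: fake_now[0])
cache.get("https://example.com/")  # fetched from the "server"
cache.get("https://example.com/")  # served from the cache
fake_now[0] = 120.0                # two minutes later, the copy is stale
cache.get("https://example.com/")  # fetched again
print(len(requests))               # 2
```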

2. Video Streaming and Buffering

Video streaming relies on buffering, a close cousin of caching. The player temporarily stores upcoming parts of the video in memory before they are played, which absorbs short slowdowns in the network connection, minimizes buffering interruptions, and keeps playback smooth.

3. Database Management and Caching Techniques

Database systems use caching to speed up data access. They keep frequently accessed records, indexes, or query results in memory so that future retrievals are faster. This reduces how often data must be read from disk storage, making the database work faster overall.
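A common application-level version of this is the cache-aside pattern: check the cache first, and only on a miss read from the database and remember the result. Below is a minimal Python sketch using the standard library’s `functools.lru_cache`; `read_record_from_disk` is a hypothetical stand-in for a slow query. Database engines manage their own internal caches (such as buffer pools) differently, so this only illustrates the idea.

```python
from functools import lru_cache

disk_reads = 0  # counts how often the (simulated) disk is read


def read_record_from_disk(record_id: int) -> tuple[int, str]:
    """Hypothetical stand-in for a slow database read."""
    global disk_reads
    disk_reads += 1
    return (record_id, f"customer-{record_id}")


@lru_cache(maxsize=1024)
def get_record(record_id: int) -> tuple[int, str]:
    """Serve from the in-memory cache if possible, otherwise read and remember."""
    return read_record_from_disk(record_id)


get_record(7)   # miss: reads from disk
get_record(7)   # hit: served from memory
get_record(8)   # miss: a different record
print(disk_reads)               # 2
print(get_record.cache_info())  # CacheInfo(hits=1, misses=2, maxsize=1024, currsize=2)
```

Returning tuples keeps cached results immutable, so one caller cannot accidentally change what another receives. The hard part in practice is invalidation: when a record changes, the cached copy must be discarded, for example by calling `get_record.cache_clear()`, which empties the whole cache.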

4. Gaming Performance Optimization

Games use caching to boost performance. They keep often-used game assets, such as textures and audio files, in memory so the system can load and display them quickly, which shortens loading times and makes gameplay smoother.

5. Mobile Applications and Responsiveness

Mobile apps use caching to stay responsive and save data. They store commonly used app data, such as images and preferences, on the device, which lets the app show content quickly even offline or on a weak network. By caching data, mobile apps can give users a smooth experience while using less mobile data.

6. Content Delivery Networks (CDNs)

Caching is central to content delivery networks (CDNs), which copy content to many cache servers around the world.

When a user requests something, the nearest CDN server delivers it from its cache. This makes content load faster and avoids long round trips to the main (origin) server. Caching in CDNs makes content delivery faster and more scalable.

Future Trends in Cache Memory

Future trends in cache memory include advances in cache technology, such as 3D stacking and non-volatile cache, and cache integration in emerging technologies like quantum computing and AI accelerators.

1. Advances in cache technology

3D stacking

To meet the need for more cache space and better performance, 3D stacking places layers of cache memory vertically on top of one another. This boosts capacity without enlarging the chip’s footprint and brings the cache closer to the processor for faster data access and lower latency.

Non-volatile cache

Unlike a traditional volatile cache, which loses its contents when power is lost, a non-volatile cache retains data without power. This could speed up system startup, reduce the risk of data loss, and improve energy efficiency, since the cache would not need power just to hold its contents.

2. Cache Memory in emerging technologies

Quantum computing

Quantum computers rely on unique properties such as superposition and entanglement, so any cache-like memory for them would need to preserve these fragile quantum states, something conventional cache memory is not designed to do.

AI accelerators

AI accelerators are specialized hardware built for fast AI and machine learning workloads. Adding cache memory to them helps them run even faster by speeding up access to the large amounts of data these workloads use.

Final Words

Cache memory is a crucial component that helps improve a computer’s speed by keeping frequently used data close to the processor, reducing the time it takes to access that data.

It’s often overlooked, but it plays a significant role in overall computer performance. So the next time your computer feels fast, remember that the cache deserves some of the credit.

FAQs

Is it okay to clear the cache on apps?

Yes, it’s okay to clear the cache on apps. It can help free up storage space and sometimes resolve issues, but some apps may run more slowly the next time you use them while they rebuild their cache.

Why does cache take up storage?

Cache takes up storage because it stores temporary data, such as images and files from websites and apps, so they load faster when you revisit them. Over time, this cached data can accumulate and consume a noticeable amount of storage space.

Do I need Windows cache files?

Yes, Windows cache files are useful. They help improve the speed and performance of your computer by storing frequently accessed data. Deleting them can free up space but might slow down your system temporarily.

Is cache memory faster than RAM?

Yes. Cache memory is faster than RAM because it is built from faster SRAM, sits close to the CPU, and is much smaller. It is a high-speed memory that stores frequently accessed data and instructions from RAM, acting as a buffer between the CPU and RAM to allow quicker data access.

How does cache compare to registers and RAM?

Cache is larger but slower than registers. Registers are the smallest and fastest memory within a CPU, used for storing and managing data during immediate processing.

Cache memory is much smaller than RAM but much faster, so it serves as a bridge between registers and RAM, storing frequently used data to speed up CPU operations.

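Why is cache memory size limited?

Cache memory size is limited because SRAM is more expensive per byte than the DRAM used for main memory and takes up valuable space on the CPU chip. Larger caches are also slower to access.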

To balance cost, physical size, and performance, computers use a hierarchy of memory types, with larger but slower memory (like RAM) complemented by smaller but faster cache memory.

Can a computer run without cache memory?

Yes, a computer can run without cache memory, and many early computers did, but its performance would be significantly slower because cache speeds up data access for the CPU.