NVIDIA and SK hynix have expanded their AI hardware collaboration beyond high-bandwidth memory, moving into joint development of AI-focused solid-state drives designed specifically for inference workloads.

According to multiple industry reports, the companies are working on storage technology intended to remove performance bottlenecks that GPUs and HBM alone can no longer solve. The effort reflects a broader shift in how AI systems are being designed as inference workloads continue to scale.

The project is internally referred to as “Storage Next” at NVIDIA and “AI-N P” at SK hynix. The long-term goal, according to the reports, is to achieve ten times the performance of existing enterprise SSDs, targeting 100 million IOPS by 2027.
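To put the 100 million IOPS target in perspective, the short calculation below converts an IOPS figure into the sustained throughput it implies. It is a back-of-the-envelope sketch: the block sizes are illustrative assumptions, not figures from the reports, and the function name is hypothetical.

```python
def throughput_gb_per_s(iops: float, block_bytes: int) -> float:
    """Throughput in decimal GB/s implied by an IOPS rate at a given block size."""
    return iops * block_bytes / 1e9


TARGET_IOPS = 100_000_000  # target cited in the reports

# Assumed block sizes, chosen only for illustration.
for block_bytes in (512, 4096):
    gbps = throughput_gb_per_s(TARGET_IOPS, block_bytes)
    print(f"{block_bytes} B blocks: {gbps:.1f} GB/s")
```

Under these assumptions, the same IOPS figure implies very different bandwidth depending on how small each access is, which is why the I/O size matters when comparing drives.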

Storage Emerging as a Bottleneck in AI Inference

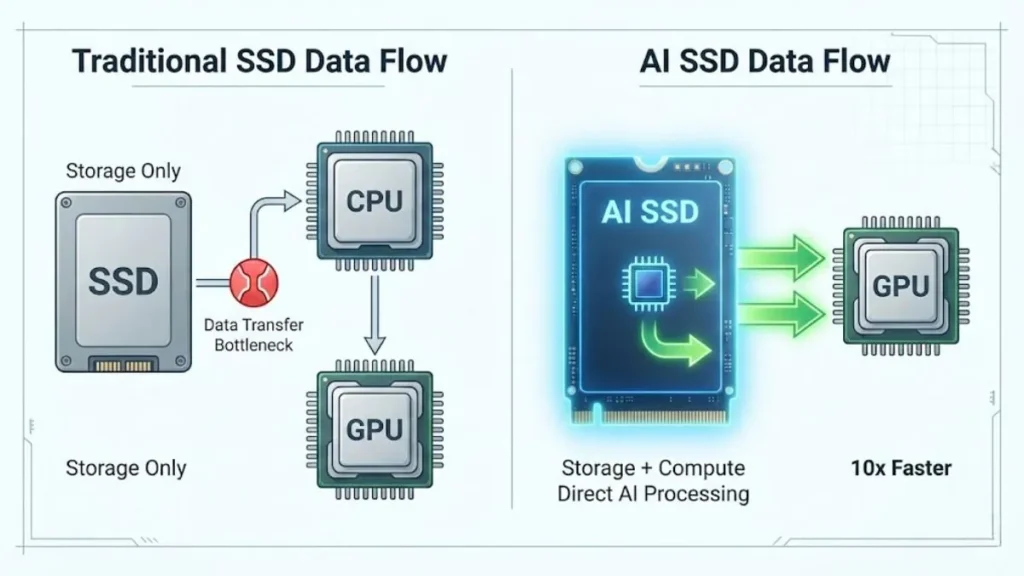

While recent advancements in AI hardware have primarily focused on GPUs and high-bandwidth memory, inference workloads place unique demands on system architecture. According to Chosun Biz, large language models require constant access to massive parameter sets, user context, and external data sources that often exceed the capacity of on-package memory.

SK hynix has said that advanced inference systems may need several terabytes of accessible data, more than a single GPU's memory can hold, which forces operators to spread the workload across multi-GPU configurations. This increases latency, power consumption, and overall system cost, making storage performance a limiting factor in large-scale deployments.
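A rough sizing sketch shows why a multi-terabyte working set pushes operators toward many GPUs. The 4 TB working set and 192 GB of memory per GPU are assumed values for illustration only, and the calculation ignores replication, activations, and other overheads.

```python
import math


def gpus_needed(working_set_tb: float, hbm_per_gpu_gb: float) -> int:
    """Minimum GPU count whose combined memory can hold the working set."""
    return math.ceil(working_set_tb * 1000 / hbm_per_gpu_gb)


# Both inputs are hypothetical, not figures from SK hynix or the reports.
print(gpus_needed(working_set_tb=4, hbm_per_gpu_gb=192))
```

Here the GPU count is set by memory capacity rather than by compute demand, which is the gap a faster storage tier is meant to narrow.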

AI SSDs Positioned as a New System Layer

According to Biztalktalk, SK hynix is designing the upcoming AI SSDs as a pseudo-memory layer that sits between system DRAM and long-term storage. Unlike traditional enterprise SSDs, these drives are being optimized at both the NAND architecture and controller levels to support the high-frequency data access patterns common in inference workloads.

The goal is to reduce GPU idle time by improving data availability rather than simply increasing raw compute power. Initial proof-of-concept testing is already underway, and a working prototype is expected during the next development phase.

Parallel Work on High-Bandwidth NAND with SanDisk

In parallel with its work with NVIDIA, SK hynix is also collaborating with SanDisk on high-bandwidth flash, a technology that applies a stacking concept similar to HBM while retaining the non-volatile characteristics of NAND. According to Chosun Biz, an alpha version of the high-bandwidth flash is expected next year, with customer evaluations planned for 2027.

Market projections cited in the same reports suggest that AI-focused NAND solutions could grow rapidly as inference workloads increase in cloud and enterprise environments.

Concerns Regarding Impact on NAND Supply

According to Wccftech, the shift towards storage designed for AI is already raising concerns in the semiconductor supply chain. Analysts warn that widespread adoption of AI SSDs could strain NAND flash production, similar to the supply shortages currently affecting DRAM and HBM.

Cloud service providers and AI companies are already consuming large quantities of memory and storage components, which could lead to reduced availability for traditional enterprise and consumer markets if capacity doesn’t increase accordingly.

What This Means for AI Infrastructure

Overall, the reports indicate a significant shift in AI system design. Instead of relying solely on powerful GPUs and large memory pools, vendors are now viewing storage as a performance-critical component, especially for inference and personalized AI services.

If the performance targets outlined by NVIDIA and SK hynix are achieved, AI-optimized SSDs could reduce inference latency, lower operating costs, and make it easier to deploy large models at scale.

Sources:

Chosun Biz

Wccftech